Set Up a Katib Job¶

To create and configure a Katib job for hyperparameter tuning, you first need to Prepare Your Notebook. Once that is done, you are ready to set up your Katib job.

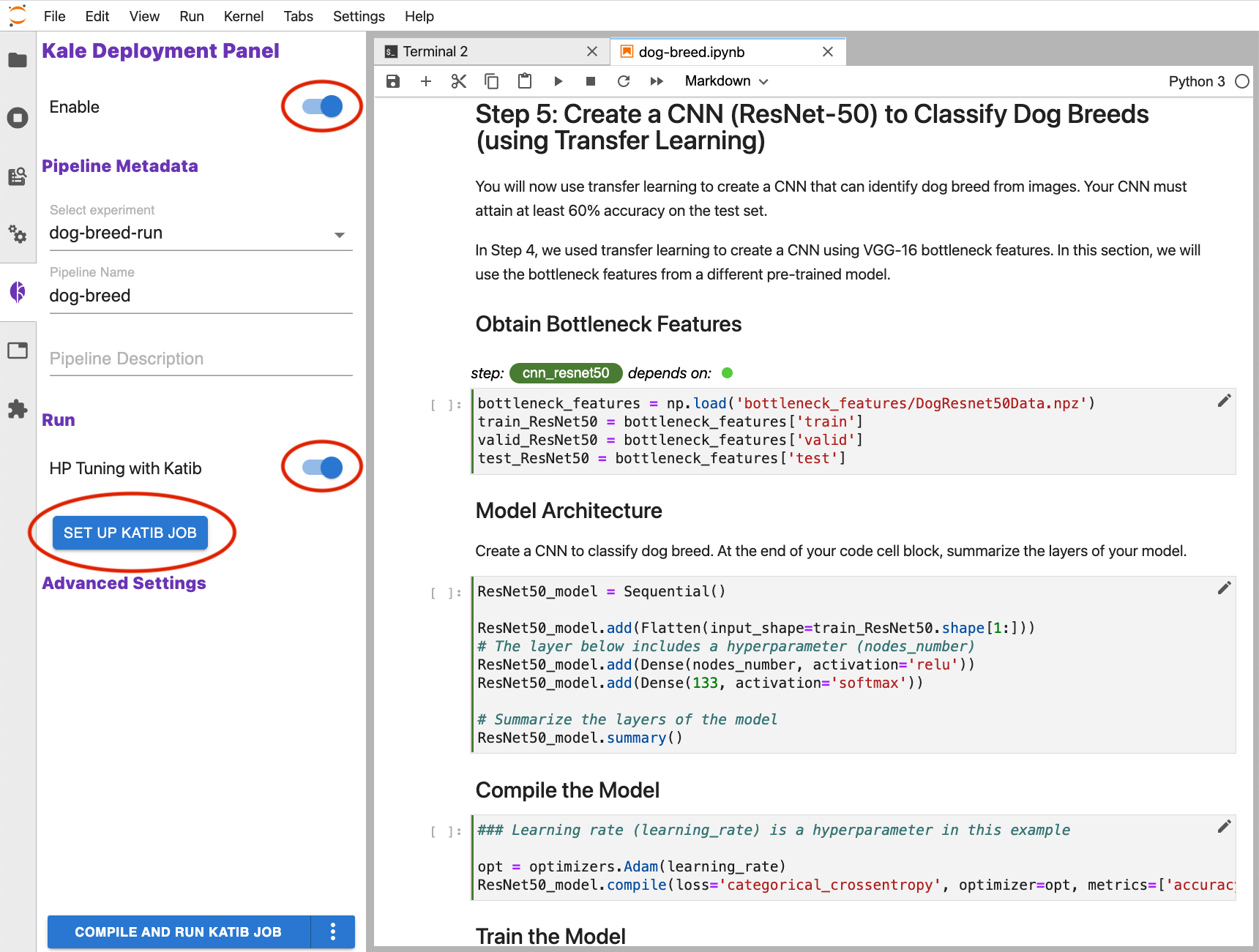

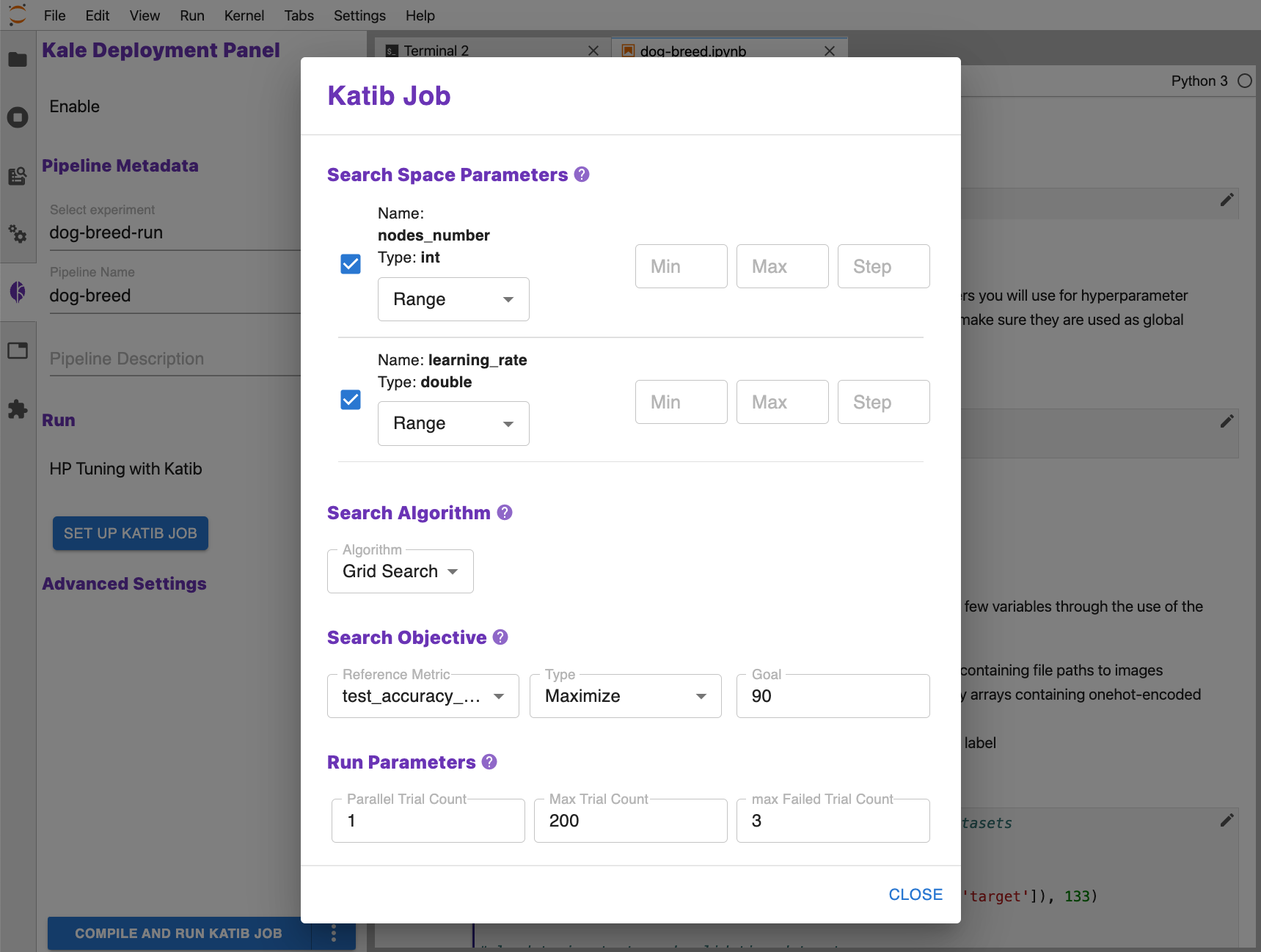

Open the Kale Deployment Panel and ensure that both Kale and Katib are enabled. Click SET UP KATIB JOB.

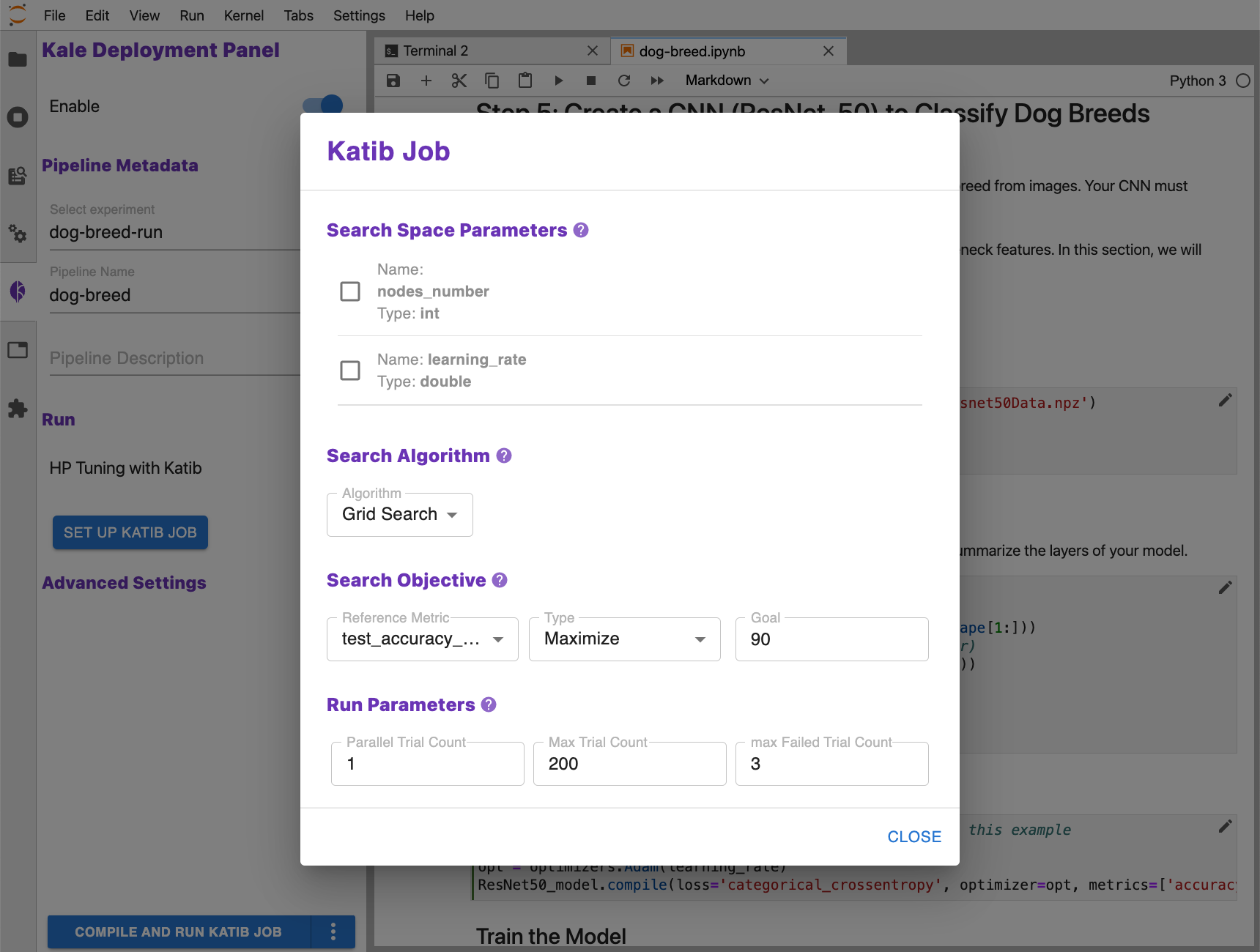

In the Katib Job modal that appears, configure the settings to set up hyperparameter tuning as desired.

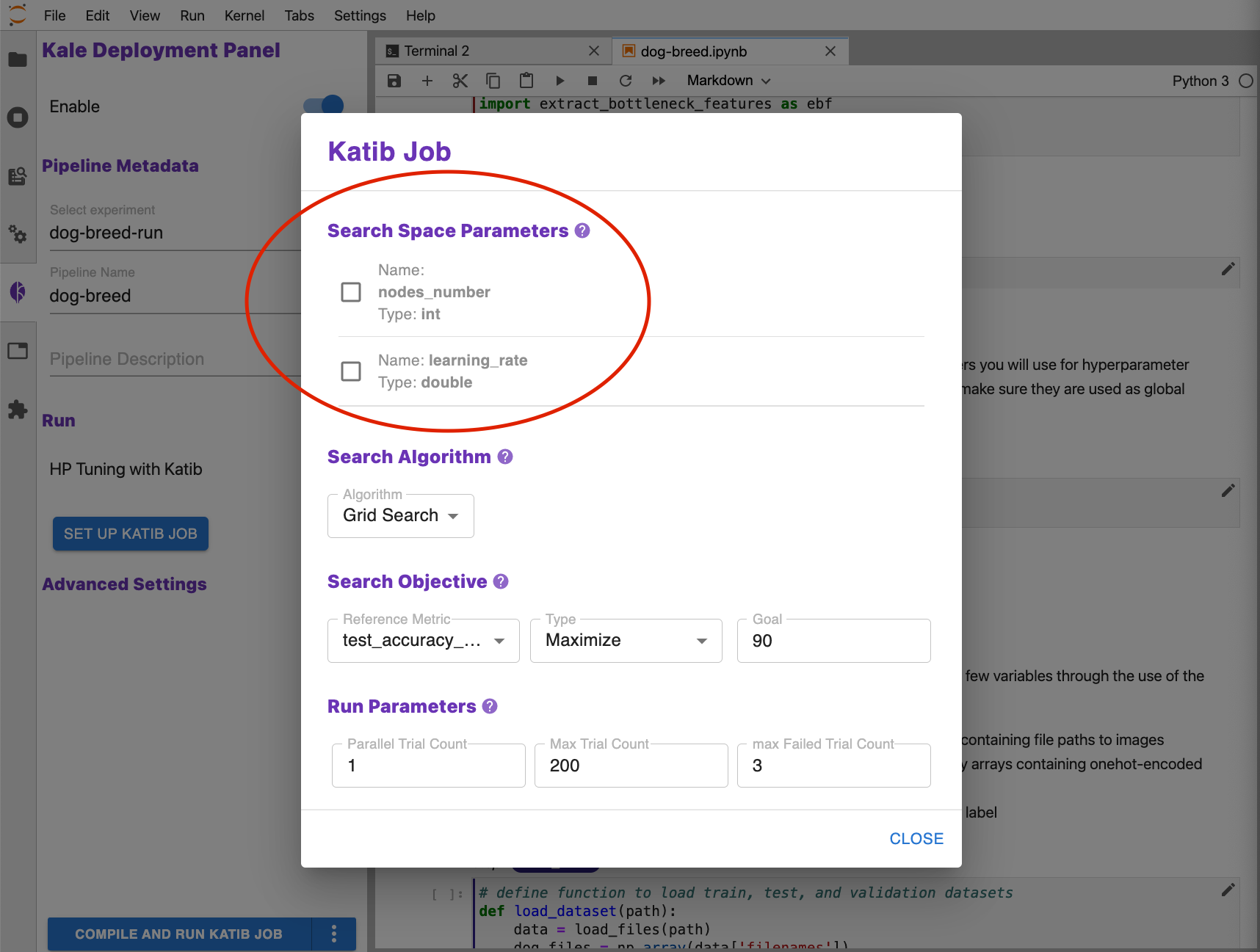

Search Space Parameters¶

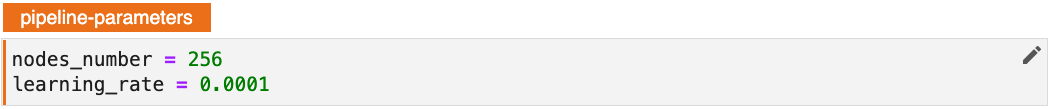

In preparing your notebook for hyperparameter tuning, you must organize a set of variables to use as hyperparameters in a single Pipeline Parameters cell. As an example, see the figure below.

Kale uses the pipeline parameters to identify the search space parameters for a Katib job. For example, based on the cell above, you would see the parameters nodes_number and learning_rate in the Katib Job UI.
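A Pipeline Parameters cell is an ordinary notebook cell that only assigns variables. The following is a minimal sketch that reuses the two parameter names mentioned above; the values are hypothetical defaults chosen for illustration and are not taken from the figure.

```python
# Hypothetical contents of a cell marked as Pipeline Parameters.
# The names match the example above; the values are illustrative only.
nodes_number = 64       # integer hyperparameter
learning_rate = 0.01    # floating-point hyperparameter
```

Each variable assigned in this cell would then be offered as a candidate search space parameter.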

You may use any combination of search space parameters for hyperparameter tuning. To use a parameter, click the checkbox next to it.

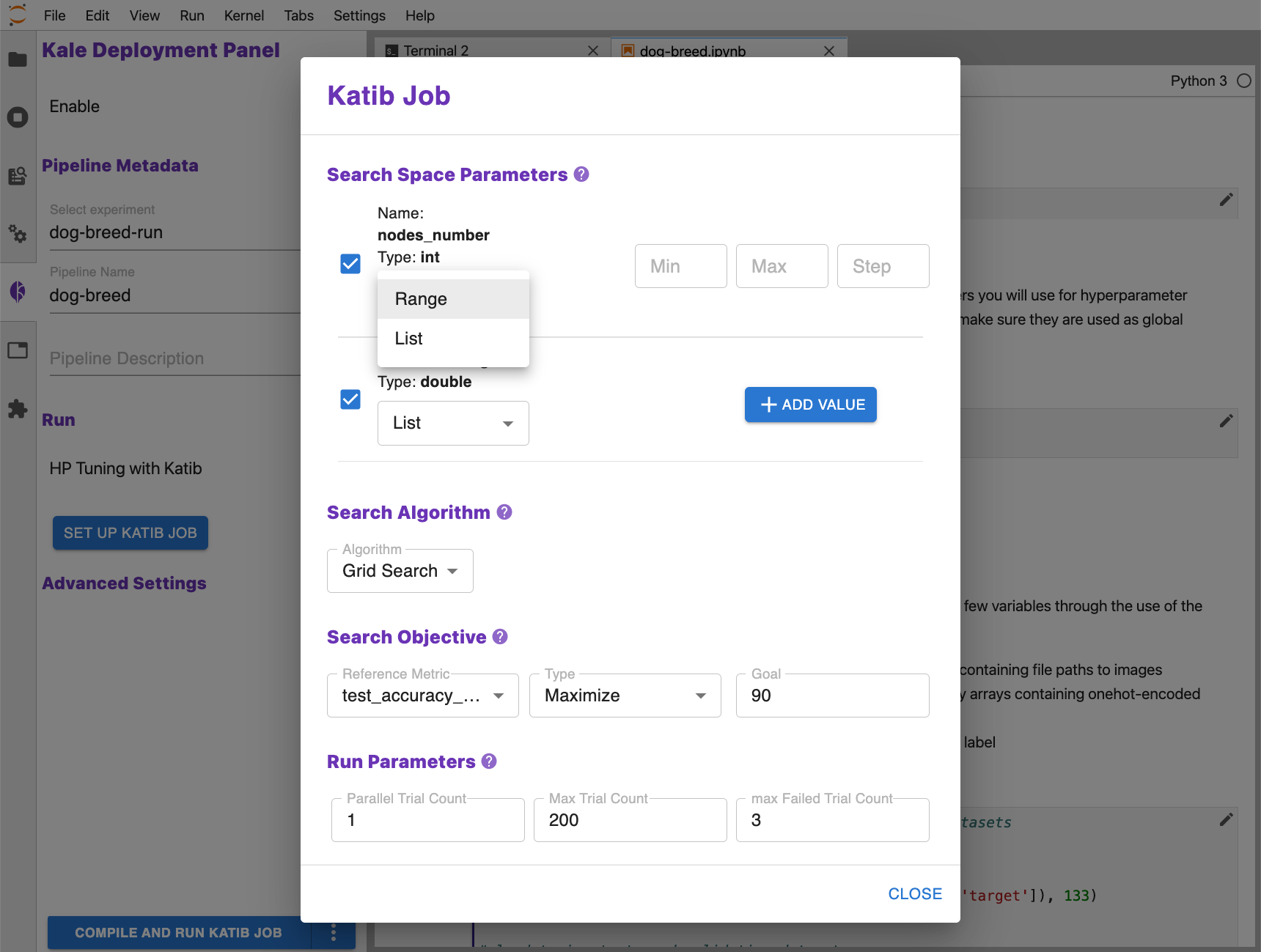

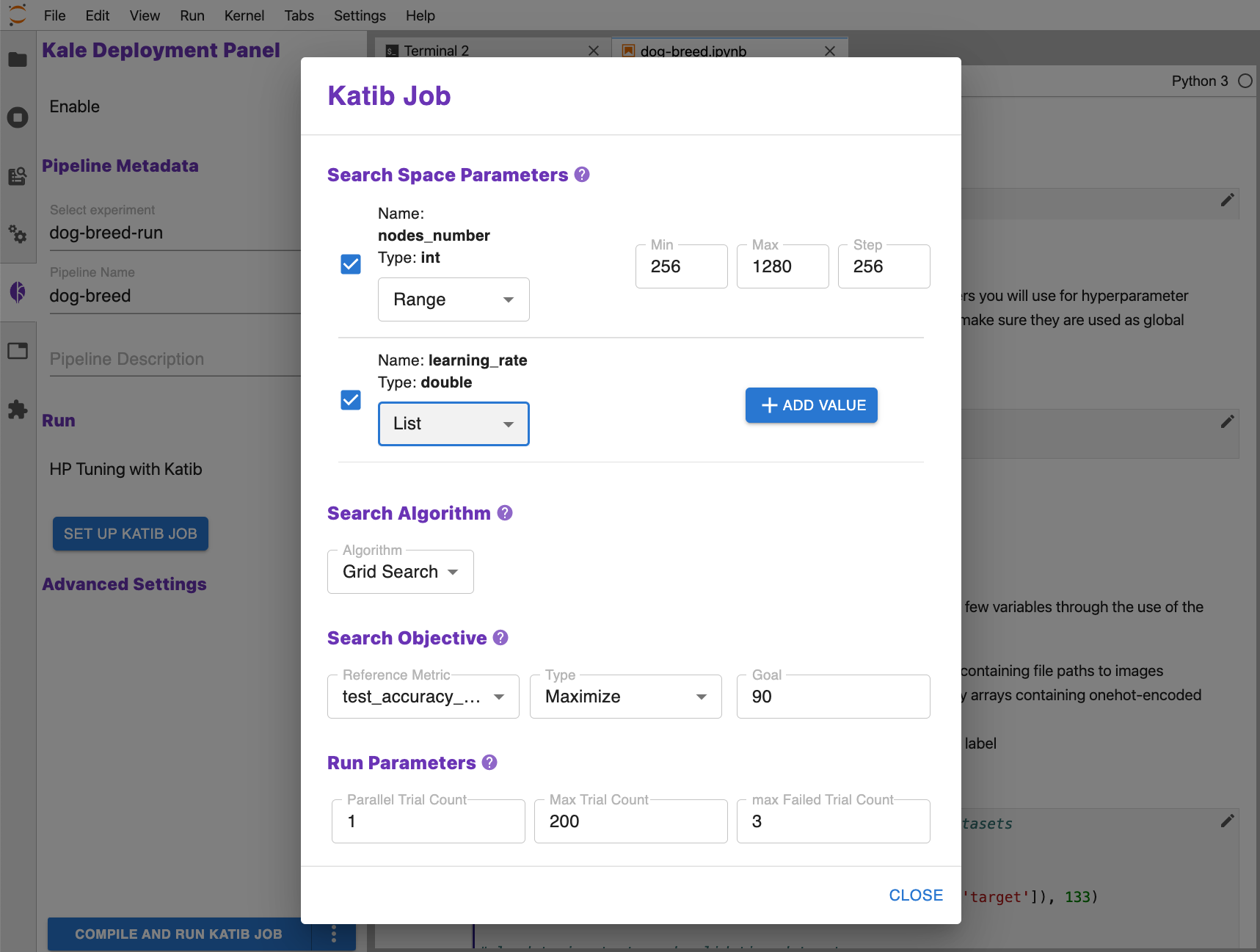

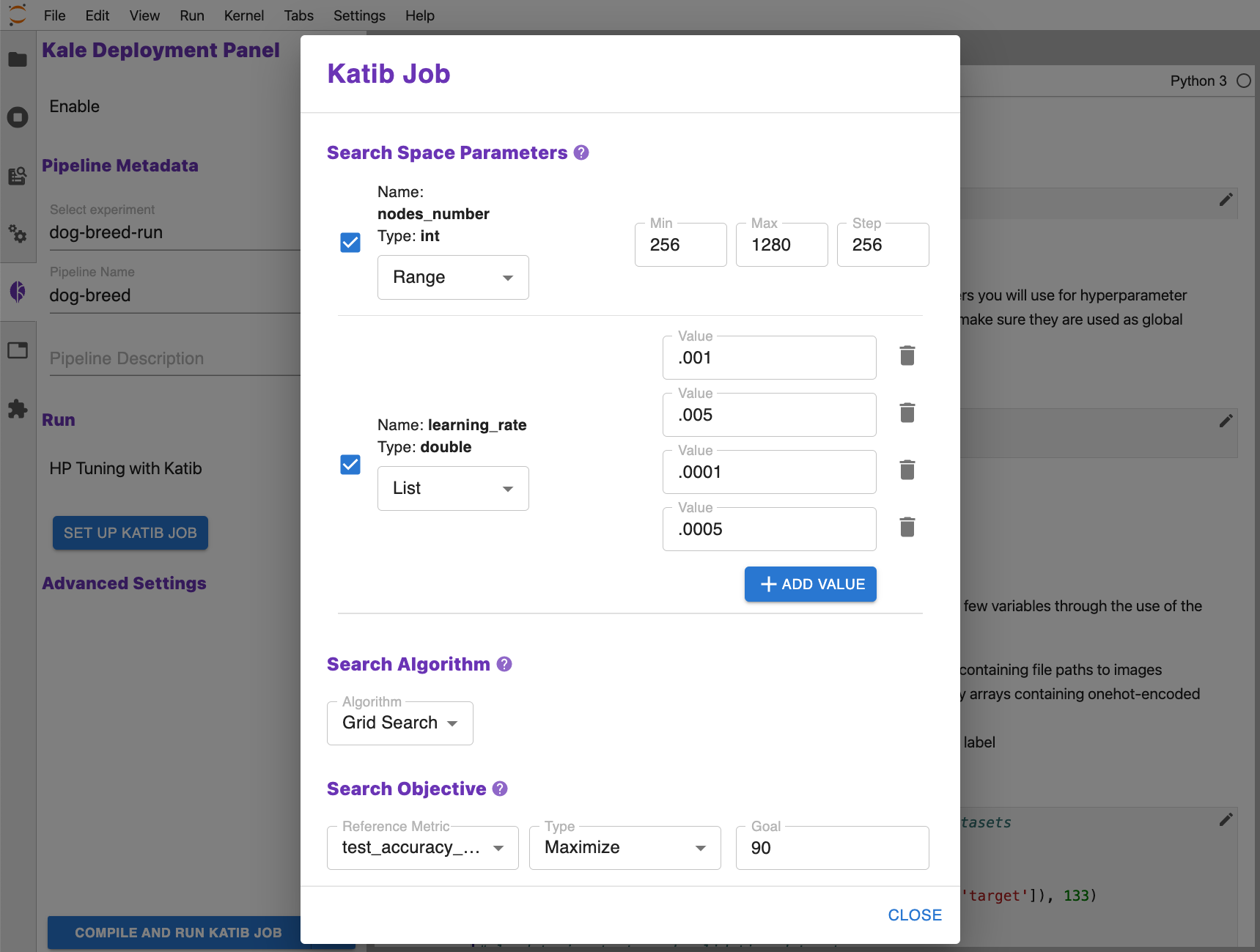

When running a job, Katib will explore a set of allowable values for the search space parameters (hyperparameters) in order to identify the values that lead to the best performance for your model. For each hyperparameter, you may specify a range or a list of allowable values.

For range parameters, enter values for Min, Max, and Step.

For list parameters, click the ADD VALUE button and enter a value. You may add as many values as you like to complete the list of allowable values for the parameter.
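To make the difference between the two kinds of parameter concrete, the sketch below expands a range into its allowable values and places a list next to it. The helper `expand_range` is hypothetical and not part of Kale or Katib, and treating Max as inclusive is an assumption.

```python
from decimal import Decimal

def expand_range(min_value, max_value, step):
    """Return the values from min_value up to max_value in increments of step.

    Assumption: max_value is included when it falls exactly on a step.
    Decimal avoids floating-point drift such as 0.1 + 0.2 != 0.3.
    """
    low, high, increment = (Decimal(str(v)) for v in (min_value, max_value, step))
    if increment <= 0:
        raise ValueError("step must be positive")
    values = []
    current = low
    while current <= high:
        values.append(float(current))
        current += increment
    return values

# Range parameter: Min = 16, Max = 64, Step = 16
nodes_number_values = expand_range(16, 64, 16)

# List parameter: values added one by one with ADD VALUE
learning_rate_values = [0.001, 0.01, 0.1]
```

A range suits evenly spaced values, while a list lets you choose values on an arbitrary scale, such as the powers of ten used here.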

When the job is launched, Katib will create a series of pipeline runs to test allowable hyperparameter values based on the search algorithm you specify.

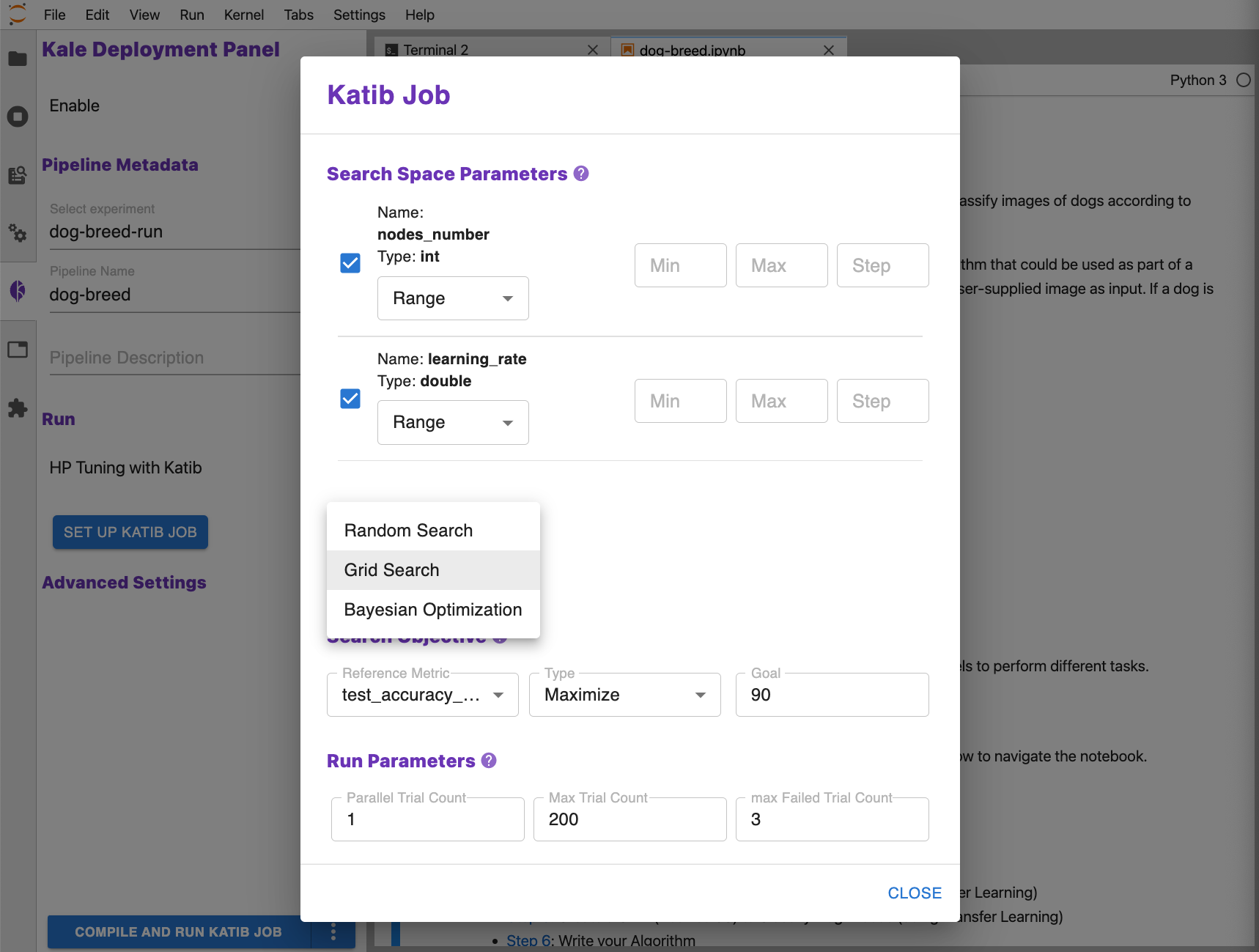

Search Algorithm¶

Katib uses one of several search algorithms to optimize hyperparameter values. The search algorithm defines the method by which Katib will explore the space of possible values for hyperparameters. The goal is to optimize the Search Objective.

The choice of search algorithm is often driven by the need to keep the space of possible values that must be considered within bounds set by your time and computational constraints.

Katib enables you to select from the following search algorithms.

Grid Search¶

Grid search is useful when all variables are discrete (not continuous) and the number of possible values is low. A grid search performs an exhaustive search over all combinations of values in the defined list or range for each search space parameter. This can result in a long search process even for medium-sized problems. Katib uses the Chocolate optimization framework for grid search.
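The exhaustive nature of grid search can be illustrated in a few lines of plain Python. This is only a sketch of the idea, not how Katib or Chocolate implement it, and the search space values are hypothetical.

```python
from itertools import product

# Hypothetical search space: every parameter has a finite set of values.
search_space = {
    "nodes_number": [16, 32, 64],
    "learning_rate": [0.001, 0.01, 0.1],
}

def grid(space):
    """Yield one dict per combination of parameter values."""
    names = list(space)
    for combination in product(*(space[name] for name in names)):
        yield dict(zip(names, combination))

trials = list(grid(search_space))
print(len(trials))  # 3 values x 3 values = 9 combinations
```

The number of combinations is the product of the number of values per parameter, which is why the search grows quickly as parameters or values are added.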

Random Search¶

Random sampling is an alternative to grid search and is used when the number of discrete variables to optimize is large and the time required for each evaluation is long. When all parameters are discrete, random search performs sampling without replacement. Random search is therefore the best algorithm to use when exhaustive exploration is not possible. If the number of continuous variables is high, you should use quasi-random sampling instead. Katib uses the Hyperopt, Goptuna, Chocolate or Optuna optimization framework for random search.
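Sampling without replacement over a discrete search space can be sketched as follows. The function `random_trials` and the search space values are hypothetical, and the code illustrates the technique rather than the behavior of any of the frameworks named above.

```python
import random
from math import prod

def random_trials(space, count, seed=0):
    """Pick `count` distinct combinations without building the full grid."""
    names = list(space)
    sizes = [len(space[name]) for name in names]
    total = prod(sizes)  # number of possible combinations
    rng = random.Random(seed)
    # Sampling distinct indices guarantees that no combination repeats.
    picked = rng.sample(range(total), k=min(count, total))
    trials = []
    for index in picked:
        trial = {}
        # Decode the index into one position per parameter (mixed radix).
        for name, size in zip(names, sizes):
            index, offset = divmod(index, size)
            trial[name] = space[name][offset]
        trials.append(trial)
    return trials

search_space = {
    "nodes_number": [16, 32, 64],
    "learning_rate": [0.001, 0.01, 0.1],
}
sampled = random_trials(search_space, count=4)
```

Because only `count` combinations are evaluated, the cost is fixed in advance regardless of how large the full space is.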

Bayesian Optimization¶

The Bayesian optimization method uses Gaussian process regression to model the search space. This technique calculates an estimate of the loss function and the uncertainty of that estimate at every point in the search space. The method is suitable when the number of dimensions in the search space is low. Since the method models both the expected loss and the uncertainty, the search algorithm converges in a few steps, making it a good choice when the time to complete the evaluation of a parameter configuration is long. Katib uses the Scikit-Optimize (skopt) or Chocolate optimization framework for Bayesian search.
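The idea of modeling both the expected loss and its uncertainty can be sketched for a single hyperparameter in pure Python. The RBF kernel, its length scale, the zero-mean prior, and the lower-confidence-bound rule used to pick the next point are all assumptions made for illustration; they are not taken from Katib, skopt, or Chocolate.

```python
import math

def rbf(a, b, length_scale=0.2):
    """Similarity between two points; equals 1.0 when a == b."""
    return math.exp(-0.5 * ((a - b) / length_scale) ** 2)

def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x

def posterior(x_seen, y_seen, x_new, noise=1e-6):
    """Gaussian process estimate (mean, std) of the loss at x_new."""
    k = [[rbf(a, b) + (noise if i == j else 0.0)
          for j, b in enumerate(x_seen)]
         for i, a in enumerate(x_seen)]
    k_star = [rbf(a, x_new) for a in x_seen]
    weights = solve(k, k_star)  # K^-1 k_*
    mean = sum(w * y for w, y in zip(weights, y_seen))
    variance = rbf(x_new, x_new) - sum(w * ks for w, ks in zip(weights, k_star))
    return mean, math.sqrt(max(variance, 0.0))

def suggest(x_seen, y_seen, candidates, kappa=2.0):
    """Pick the candidate with the lowest optimistic loss (mean - kappa * std)."""
    def lower_confidence_bound(x):
        mean, std = posterior(x_seen, y_seen, x)
        return mean - kappa * std
    return min(candidates, key=lower_confidence_bound)

# Hypothetical losses observed for three values of one hyperparameter.
x_seen = [0.0, 0.5, 1.0]
y_seen = [1.0, 0.2, 0.8]
candidates = [i / 10 for i in range(11)]
next_x = suggest(x_seen, y_seen, candidates)
```

The uncertainty is close to zero at points that have already been evaluated and grows with distance from them, so the rule balances revisiting promising regions against exploring unknown ones.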

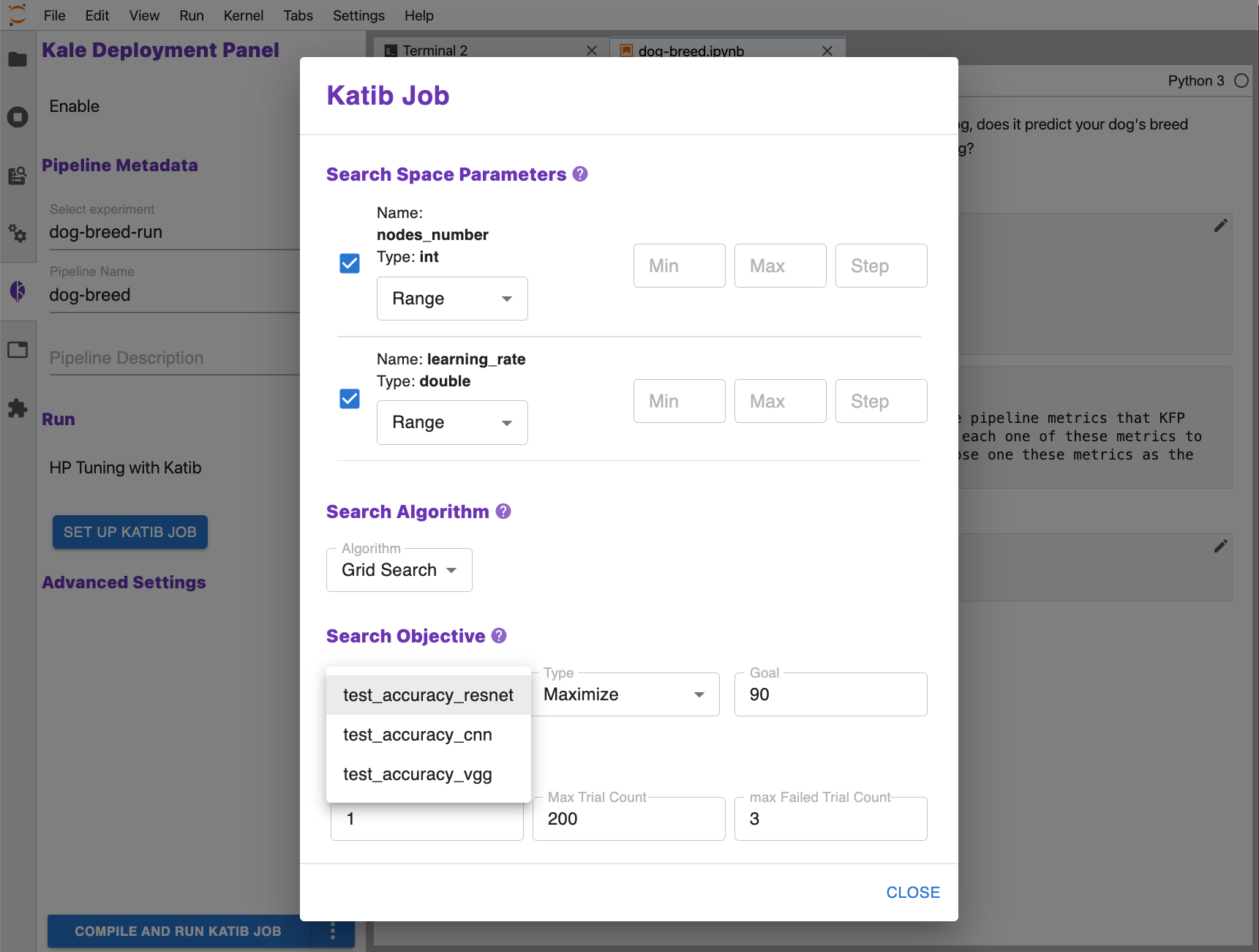

Search Objective¶

The search objective is the metric that you are optimizing. The optimal hyperparameter values are those that result in the best value for the search objective.

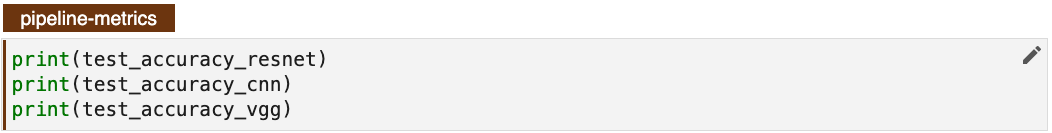

In preparing your notebook for hyperparameter tuning, you must organize one or more variables in a single Pipeline Metrics cell. As an example, see the figure below.

Reference Metric¶

Kale uses the pipeline metrics to identify the variables that you may select from as the reference metric of the search objective. For example, based on the cell above, you would see test_accuracy_resnet, test_accuracy_cnn, and test_accuracy_vgg as options for Reference Metric.

Type¶

As the type for the search objective, you may select either Maximize or Minimize to control how Katib compares the results of trials.

Goal¶

You may specify a goal value for the search objective. This provides a target for the search algorithm. If a trial exceeds this value in either minimizing or maximizing the reference metric, Katib will conclude the experiment and report the hyperparameter values that produced that result.
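How the type and the goal interact can be sketched with two small functions. Both helpers are hypothetical, the trial values are invented for illustration, and treating a result that exactly equals the goal as not yet reaching it is an assumption based on the wording "exceeds".

```python
def goal_reached(metric_value, goal, objective_type):
    """Return True when a trial result goes past the goal."""
    if objective_type == "maximize":
        return metric_value > goal
    if objective_type == "minimize":
        return metric_value < goal
    raise ValueError("objective_type must be 'maximize' or 'minimize'")

def best_trial(trials, objective_type):
    """Return the trial whose metric is best for the given objective type."""
    choose = max if objective_type == "maximize" else min
    return choose(trials, key=lambda trial: trial["metric"])

# Hypothetical trial results for a Maximize objective with a goal of 0.90.
trials = [
    {"learning_rate": 0.1, "metric": 0.81},
    {"learning_rate": 0.01, "metric": 0.93},
    {"learning_rate": 0.001, "metric": 0.88},
]
winner = best_trial(trials, "maximize")
done = goal_reached(winner["metric"], goal=0.90, objective_type="maximize")
```

With Minimize, the same comparison is reversed, which suits metrics such as loss or error rate.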

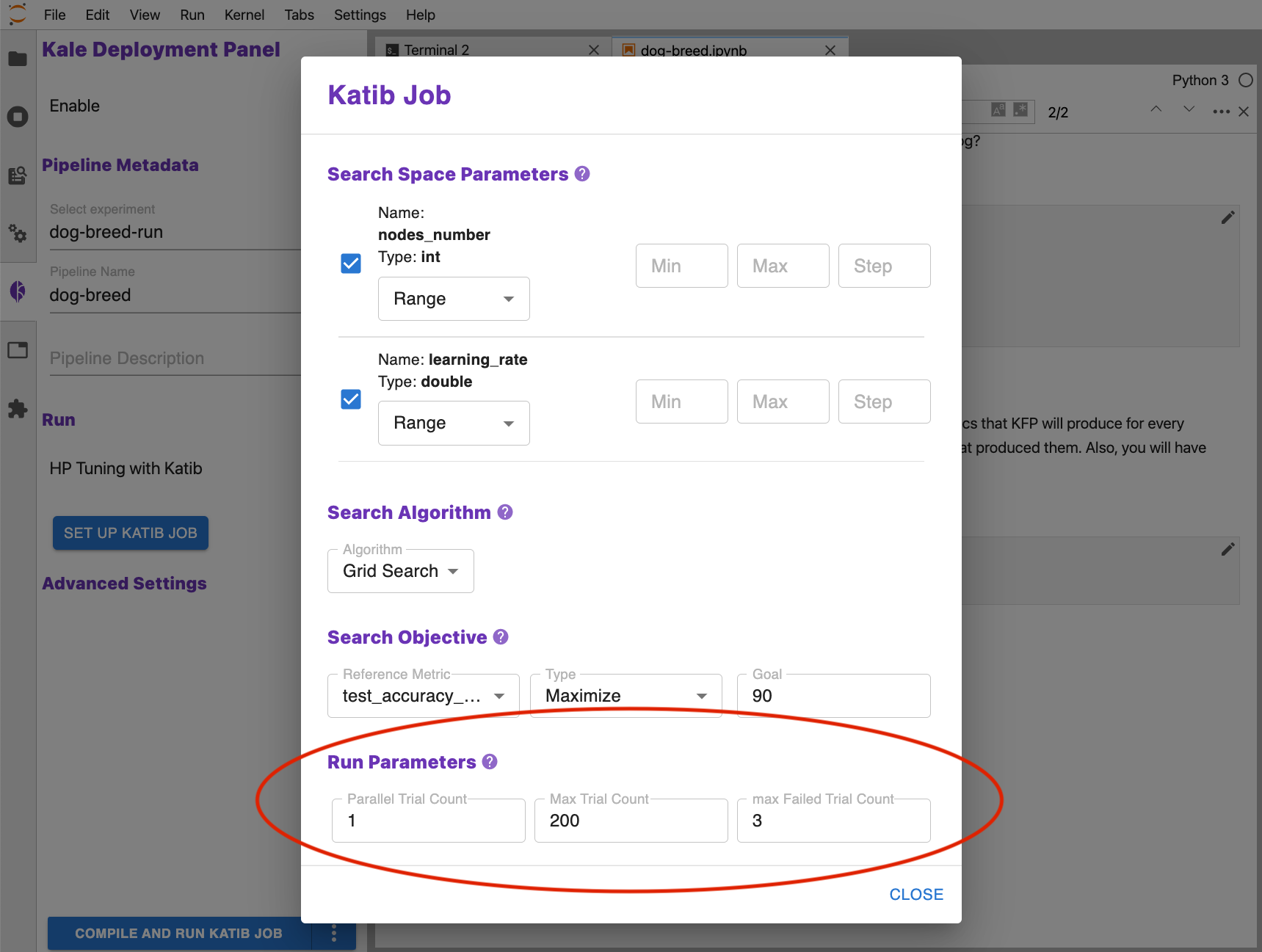

Run Parameters¶

Run parameters put boundaries on a Katib job.

Parallel Trial Count¶

Set Parallel Trial Count to specify the maximum number of trials that Katib should run in parallel. The default value is 1.

Max Trial Count¶

Set Max Trial Count to specify the maximum number of trials to run. This is equivalent to the number of hyperparameter sets that Katib should generate to test the model. If you do not set a Max Trial Count value, your experiment will run until the goal for the search objective is reached or the experiment reaches a maximum number of failed trials.

Max Failed Trial Count¶

Set Max Failed Trial Count to specify the maximum number of failed trials Katib should permit before it stops the experiment. This is equivalent to the number of failed hyperparameter sets that Katib should tolerate. If the number of failed trials exceeds Max Failed Trial Count, Katib stops the experiment with a status of Failed.
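The way these limits bound an experiment can be sketched as a simple loop over trial outcomes. This is a simplified model, not Katib's implementation: it assumes a Maximize objective, ignores parallelism, assumes failed trials count toward the maximum trial count, and uses hypothetical result labels (only Failed comes from the text above).

```python
def run_experiment(outcomes, max_trial_count=None,
                   max_failed_trial_count=None, goal=None):
    """Walk through trial outcomes and report why the experiment stops.

    outcomes: metric values in completion order; None marks a failed trial.
    """
    finished = failed = 0
    for outcome in outcomes:
        finished += 1
        if outcome is None:
            failed += 1
            # Stop once failures exceed the permitted number.
            if max_failed_trial_count is not None and failed > max_failed_trial_count:
                return "Failed"
        elif goal is not None and outcome > goal:
            return "GoalReached"
        if max_trial_count is not None and finished >= max_trial_count:
            return "MaxTrialsReached"
    return "Running"  # no stopping condition met yet

print(run_experiment([0.5, None, None], max_failed_trial_count=1))
print(run_experiment([0.5, 0.6, 0.7], max_trial_count=3))
print(run_experiment([0.5, 0.95], goal=0.9))
```

Leaving every limit unset in this model means the loop never stops on its own, which mirrors the note above about experiments that run until a goal or failure limit is reached.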