Configure Kubernetes Spec for Model¶

In this section, you will set Kubernetes configurations on a trained Machine Learning (ML) model you will serve with Kale and KFServing. You are going to configure the Kubernetes spec and metadata for the InferenceService and its underlying resources.

Overview

What You'll Need¶

- An Arrikto EKF or MiniKF deployment with the default Kale Docker image.

- An understanding of how you can serve a model with Kale.

Procedure¶

Create a new Notebook server using the default Kale Docker image. The image will have the following naming scheme:

gcr.io/arrikto/jupyter-kale-py36:<IMAGE_TAG>

Note

The

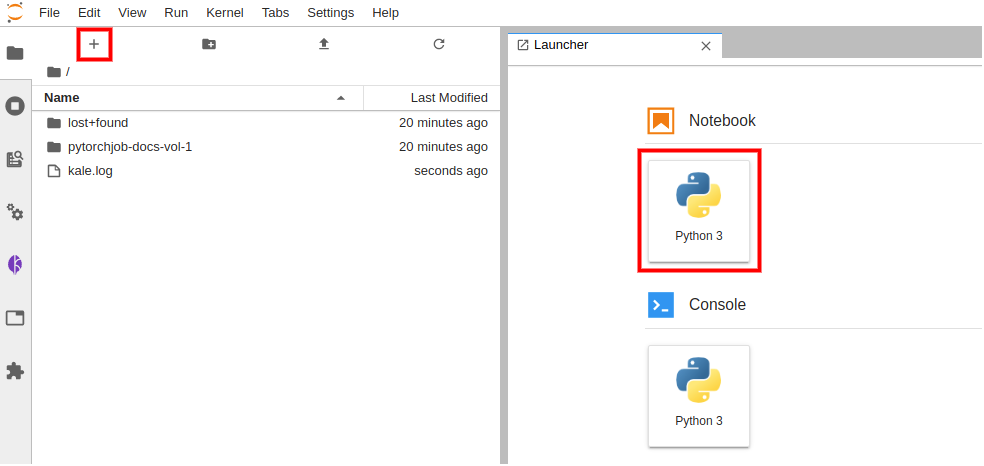

<IMAGE_TAG>varies based on the MiniKF or Arrikto EKF release.Create a new Jupyter Notebook (that is, an IPYNB file):

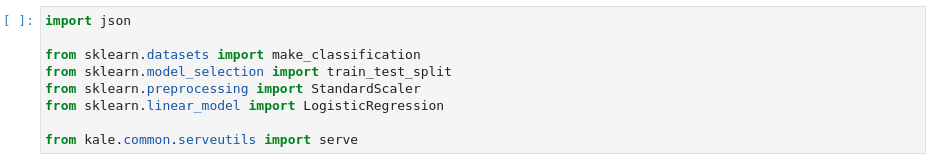

Copy and paste the import statements in the first code cell, then run it:

This is how your notebook cell will look like:

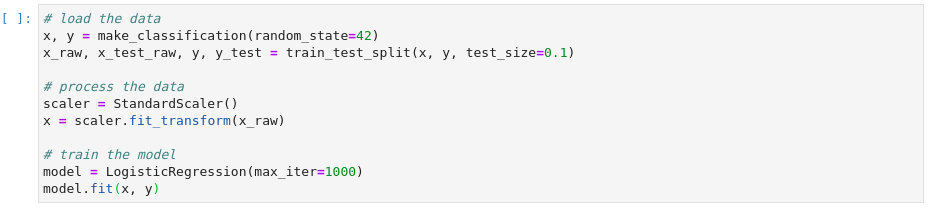

In a different code cell, prepare your dataset and train your model. Then, run it:

This is how your notebook cell will look like:

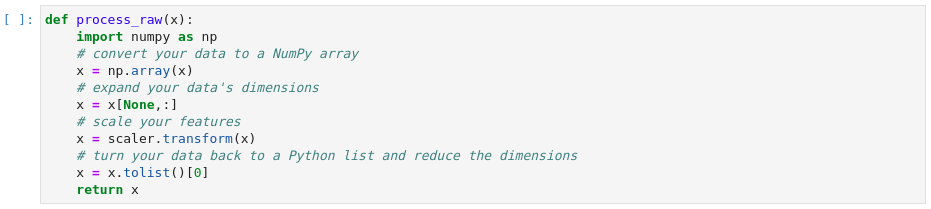

Define the data processing function that you want to turn into a KFServing transformer component in a different code cell and run it:

This is how your notebook cell will look like:

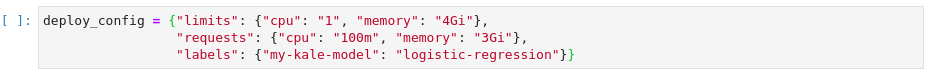

Specify your desired Kubernetes configuration for the deployment of your model in a different code cell and run it:

This is how your notebook cell will look like:

Note

To find out which are all the available configurations you can specify, take a look at the DeployConfig API.

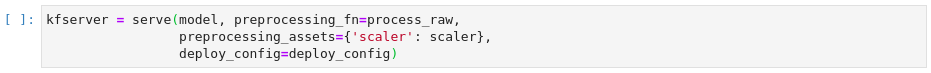

Call the

servefunction and pass the trained model, the preprocessing function, its dependencies, and the deployment configuration as arguments. Then, run the cell:This is how your notebook cell will look like:

Important

The KFServing controller uses some default values for

limitsandrequeststhat the admin sets, unless you explicitly specify them. At the same time, Kubernetes will not schedule pods when theirrequestsexceed theirlimits.To ensure that the resulting resources will have valid specs, whenever you set either one of

limitsorrequestsmake sure to specify some value for the other one as well, following the aforementioned restriction. If you provide a value just forrequestswhich exceeds the defaultlimits, Kubernetes will not schedule the resulting Pods.The

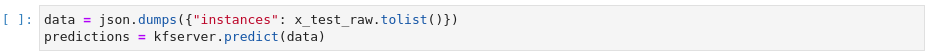

deploy_configargument accepts either adict, which Kale will use to initialize aDeployConfigobject, or aDeployConfigdirectly.Invoke the server to get predictions in a different code cell and run it:

This is how your notebook cell will look like:

Summary¶

You have successfully served and invoked a model with Kale, specifying custom Kubernetes configurations for the corresponding resources.

What's Next¶

Check out how you can invoke an already deployed model.