Invoke Existing InferenceService

In this section, you will query an existing KFServing InferenceService for predictions using Kale helpers.


What You'll Need

- An Arrikto EKF or MiniKF deployment with the default Kale Docker image.

- An InferenceService deployed in your namespace, for example, the one created after completing the Serve Model from Notebook guide.

Procedure

Note

The procedure below serves as an example for the InferenceService you deployed in the Serve Model from Notebook guide. If you want to use your own InferenceService, adjust the code accordingly.

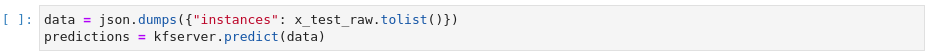

Navigate to the Models UI to retrieve the name of the InferenceService. In our example, this is `kale-serving`.

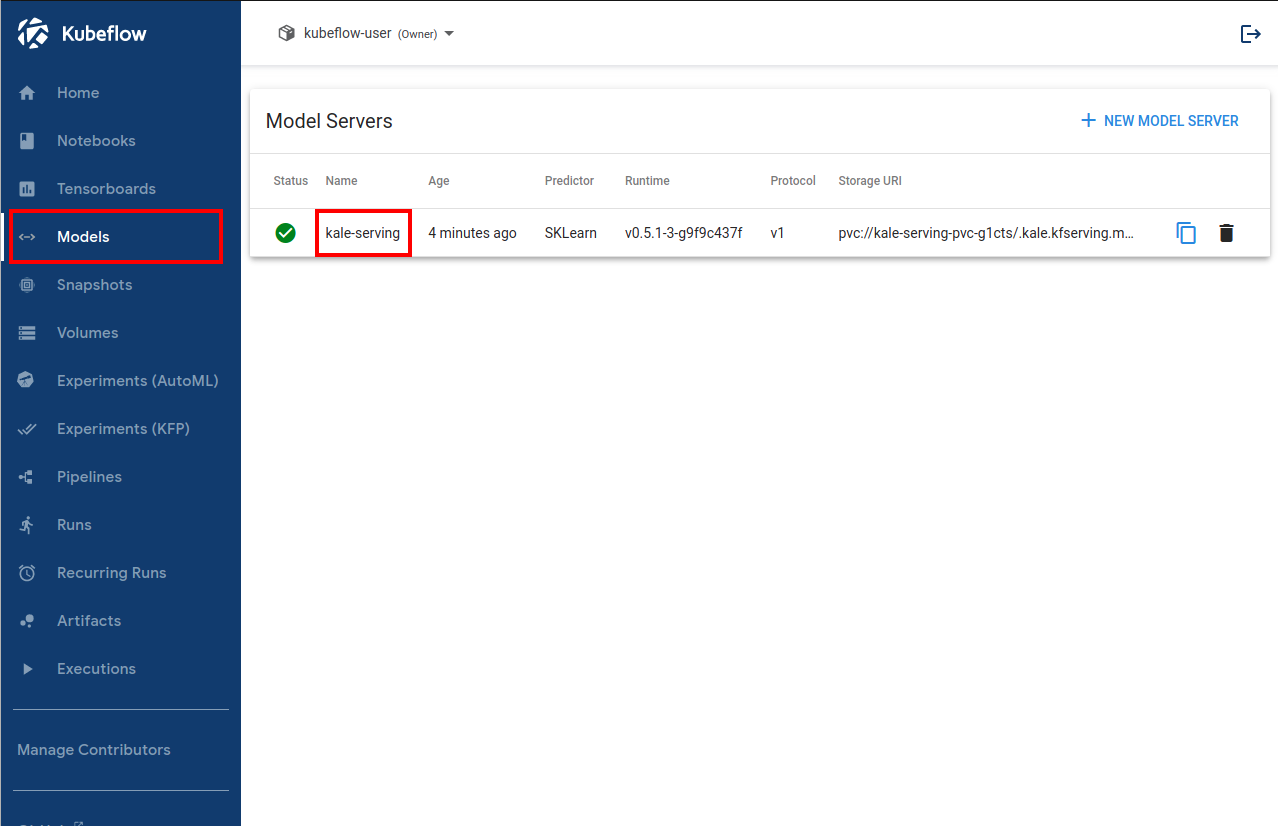

Create a new Notebook server using the default Kale Docker image. The image will have the following naming scheme:

gcr.io/arrikto/jupyter-kale-py36:<IMAGE_TAG>
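The naming scheme above can be expressed as a small Python helper. This is only a sketch. `kale_image` and `image_tag` are hypothetical names and are not part of Kale or MiniKF. The tag used below is a placeholder, not a real release tag.

```python
# Hypothetical helpers for the naming scheme
# gcr.io/arrikto/jupyter-kale-py36:<IMAGE_TAG>; not part of Kale.
KALE_IMAGE_REPO = "gcr.io/arrikto/jupyter-kale-py36"


def kale_image(tag: str) -> str:
    """Return the full image reference for a given release tag."""
    if not tag:
        raise ValueError("an image tag is required")
    return f"{KALE_IMAGE_REPO}:{tag}"


def image_tag(image: str) -> str:
    """Extract the tag from an image reference, or '' if it has none.

    Digest references (repo@sha256:...) are not handled by this sketch.
    """
    # Look for ':' only after the last '/', so a registry port
    # (for example host:5000/repo) is not mistaken for a tag.
    name = image.rsplit("/", 1)[-1]
    _, sep, tag = name.rpartition(":")
    return tag if sep else ""


print(kale_image("example-tag"))  # placeholder tag, not a real release
```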

Note

The `<IMAGE_TAG>` varies based on the MiniKF or Arrikto EKF release.

Create a new Jupyter Notebook (that is, an IPYNB file):

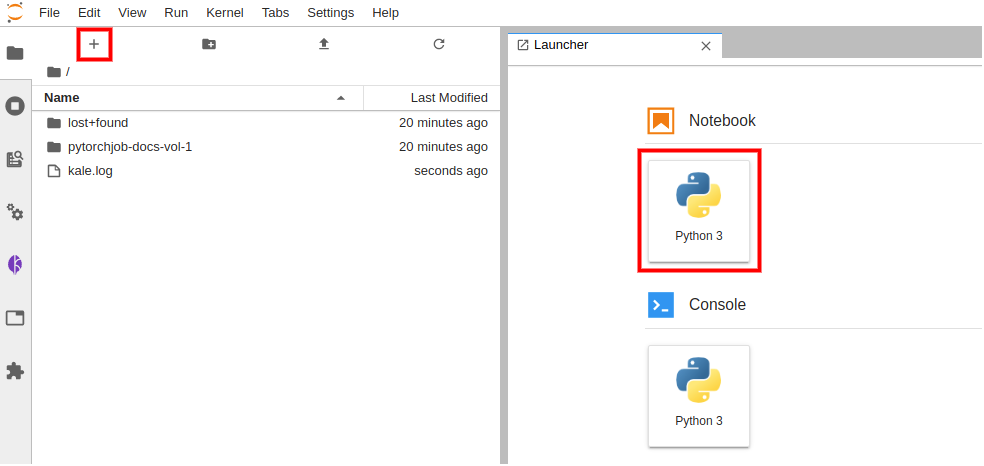

Copy and paste the import statements into the first code cell and run it:

This is what your notebook cell will look like:

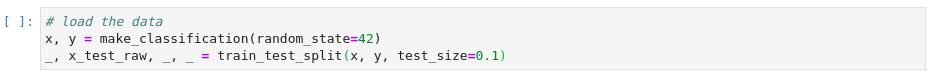
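Below is a hedged sketch of an import cell. It assumes Kale exposes its `KFServer` helper from `kale.common.serveutils`. Verify that import path against the Kale version in your image. The `try`/`except` guard only lets the cell run outside the Kale image, where `KFServer` is set to `None`.

```python
# Assumption: Kale provides KFServer in kale.common.serveutils.
# Verify this path against the Kale version shipped in your image.
try:
    from kale.common.serveutils import KFServer
except ImportError:  # for example, when running outside the Kale image
    KFServer = None

print("KFServer available:", KFServer is not None)
```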

In a different code cell, load the data you will use to get predictions. Then, run the cell:

This is what your notebook cell will look like:

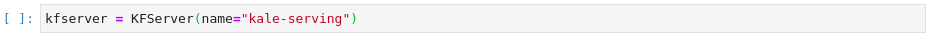
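KFServing's V1 prediction protocol expects a JSON request body with an `instances` list, one entry per input. The sketch below shows how loaded data can be packaged that way. The feature rows are hypothetical placeholders. Replace them with data that matches the shape and feature order your model expects. If your data is a NumPy array, convert it with `.tolist()` first so it is JSON-serializable.

```python
import json

# Hypothetical input rows; use data matching your model's expected input.
rows = [
    [0.1, 0.2, 0.3, 0.4],
    [0.5, 0.6, 0.7, 0.8],
]

# V1 protocol request body: {"instances": [...]}
payload = json.dumps({"instances": rows})
print(payload)
```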

In a different code cell, initialize a Kale `KFServer` object using the name of the InferenceService you retrieved during the first step. Then, run it:

Note

When initializing a `KFServer`, you can also pass the namespace of the InferenceService. If you do not provide one, Kale assumes the namespace of the Notebook server.

This is what your notebook cell will look like:

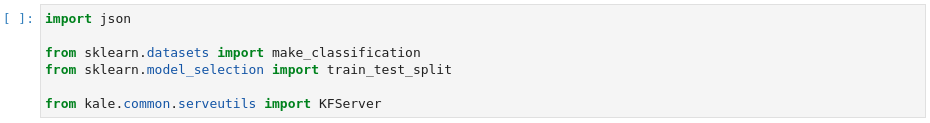
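The namespace fallback described in the note can be illustrated with a small sketch. A common way for code running in a Kubernetes pod to discover its own namespace is to read the file that Kubernetes mounts at `/var/run/secrets/kubernetes.io/serviceaccount/namespace`. `resolve_namespace` is a hypothetical helper and is not Kale's actual implementation.

```python
from pathlib import Path
from typing import Optional

# File where Kubernetes mounts the pod's service account namespace.
SA_NAMESPACE_FILE = Path("/var/run/secrets/kubernetes.io/serviceaccount/namespace")


def resolve_namespace(namespace: Optional[str] = None,
                      sa_file: Path = SA_NAMESPACE_FILE) -> str:
    """Hypothetical helper: use the given namespace, else the pod's own."""
    if namespace:
        return namespace
    try:
        # Inside a pod, this file holds the pod's namespace.
        return sa_file.read_text().strip()
    except FileNotFoundError:
        raise RuntimeError(
            "No namespace given and not running inside a Kubernetes pod"
        ) from None
```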

In a different code cell, invoke the server to get predictions. Then, run it:

This is what your notebook cell will look like:
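To show roughly what a prediction call involves, here is a sketch of a raw KFServing V1 `:predict` request built with the standard library. It assumes the V1 protocol, with a request body of `{"instances": [...]}` and a response body of `{"predictions": [...]}`. The host name is a hypothetical placeholder. The real address, and any required headers such as an ingress `Host` header, depend on your cluster's networking. Kale's `KFServer` is assumed to handle these details for you. The request is only built here, not sent.

```python
import json
import urllib.request


def build_predict_request(host: str, model_name: str,
                          instances: list) -> urllib.request.Request:
    """Build (but do not send) a KFServing V1 :predict request."""
    url = f"http://{host}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, method="POST",
        headers={"Content-Type": "application/json"},
    )


def parse_predictions(response_body: str) -> list:
    """Extract the "predictions" list from a V1 response body."""
    return json.loads(response_body)["predictions"]


# Hypothetical host; the real address depends on your cluster setup.
req = build_predict_request("kale-serving.my-namespace.svc.cluster.local",
                            "kale-serving", [[0.1, 0.2, 0.3, 0.4]])
print(req.get_method(), req.full_url)
# Sending it requires network access to the service, for example:
# with urllib.request.urlopen(req) as resp:
#     print(parse_predictions(resp.read().decode("utf-8")))
```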