Invoke Existing InferenceService with External Client

In this section, you will query an existing KFServing InferenceService for predictions using an external client.


What You’ll Need

- An existing EKF deployment.

- An exposed Serving service.

- An exposed TokenRequest API.

- A ready InferenceService (see Serve Model from Notebook).

- A service account token for an external client.

- A Python environment with the latest Arrikto wheels installed.
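Before you start, you may want to confirm that your Python environment can import the modules used in the procedure below. This is a minimal sketch: the module names are the ones the procedure imports (`requests`, `rok_kubernetes.auth`, `rok_common.protect`), and `check_modules` is a hypothetical helper, not part of the Arrikto wheels.

```python
import importlib.util


def check_modules(names):
    """Return a mapping of module name -> True if the module can be found.

    Hypothetical helper. For a top-level name, find_spec locates the module
    without executing it; for a dotted name it imports the parent package,
    and a missing parent raises ModuleNotFoundError, reported as False.
    """
    found = {}
    for name in names:
        try:
            found[name] = importlib.util.find_spec(name) is not None
        except ModuleNotFoundError:
            # The parent package of a dotted name is not installed.
            found[name] = False
    return found


if __name__ == "__main__":
    # Modules imported in the procedure on this page.
    required = ["requests", "rok_kubernetes.auth", "rok_common.protect"]
    for name, ok in check_modules(required).items():
        print(f"{name}: {'ok' if ok else 'MISSING'}")
```

Any module reported as `MISSING` points to an incomplete installation of the wheels or of `requests`.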

Procedure

Store the ServiceAccount token you have acquired in a local file, for example `serving.token`.

Start an interactive Python 3 session in your working environment:

    user@local:~$ python3

Prepare the data you will use to query your model and store it in the `model_data` variable:

    >>> model_data = [[0.24075317948856828, -0.8274159585641723, -0.20902325728602528, 0.38115838488277776,
    ...                1.2897527540827456, 0.8862935614189356, 0.5885784044206096, -0.8505204542093001,
    ...                2.601683114180395, 0.5655096456315442, 0.6653007744902968, 0.3088330125989638,
    ...                -0.5805234498047227, -1.7607627591558177, 1.702214944635238, 0.5257689308069179,
    ...                0.7533416211045325, -1.2242982362893657, 0.6731813512699584, -0.13845598398377382]]

Note: This input is tailored to the Serve Model from Notebook example.
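The first step stores the ServiceAccount token in a local file. Because the token is a credential, it is sensible to make that file readable only by you. A minimal sketch, assuming a POSIX system and that you have the token as a string; `store_token` is a hypothetical helper, not part of the Arrikto wheels:

```python
import os


def store_token(token, path="serving.token"):
    """Write a ServiceAccount token to `path`, readable only by its owner.

    Hypothetical helper. Surrounding whitespace (for example a trailing
    newline left over from copy-paste) is stripped before writing. The
    0o600 mode is only meaningful on POSIX systems.
    """
    # Create the file with owner-only permissions from the start.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as f:
        # Tighten permissions in case the file already existed with a broader mode.
        os.chmod(path, 0o600)
        f.write(token.strip())
    return path
```

For example, `store_token("<your token>")` writes `serving.token` in the current directory, which matches the `file:serving.token` URI used later.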

Specify the token itself or a URI to the file where you have stored the token:

>>> token = "file:serving.token"Specify the URL where the Kubernetes API is exposed for your cluster:

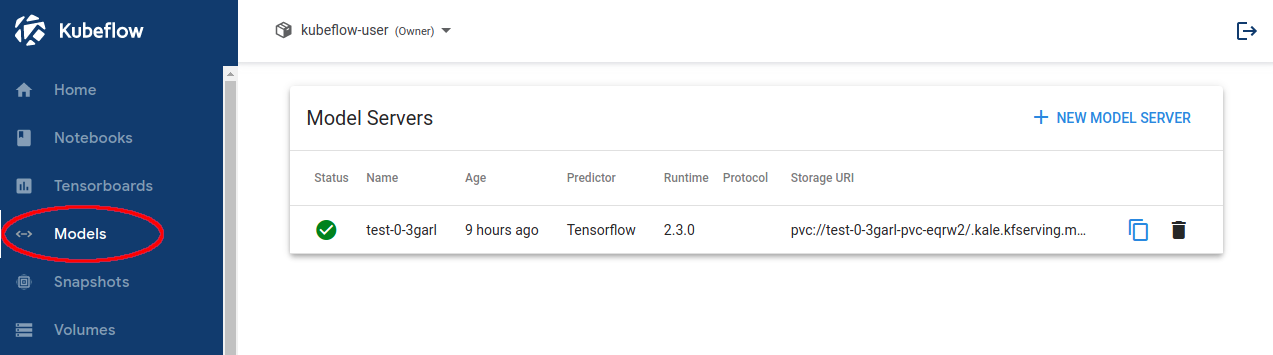

>> kubernetes_host = "https://arrikto-cluster-serving.serving.example.com/kubernetes"Navigate to the Models UI:

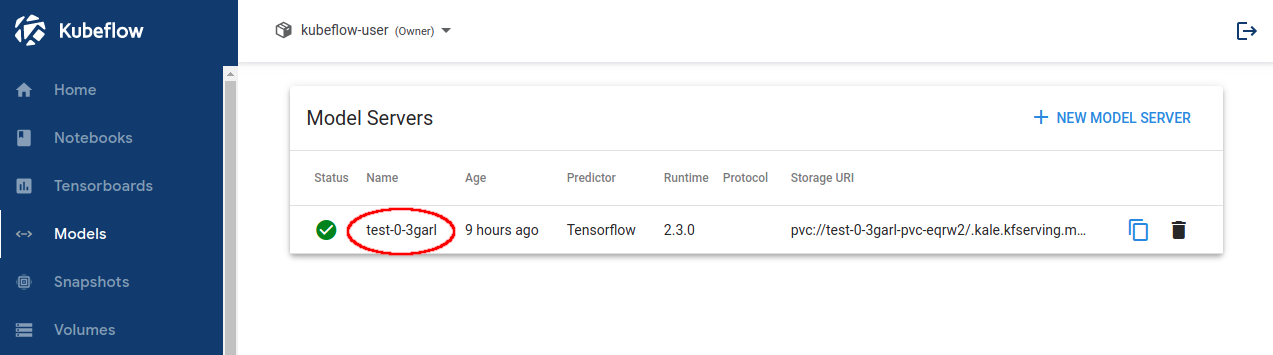

Navigate to the details of the model you want to invoke by clicking on the name of the model:
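The `token` value set earlier can be either the literal token or a `file:` URI pointing at the stored token, and the procedure passes either form straight to `ServiceAccountAuth`. The sketch below only illustrates the difference between the two forms; it assumes `file:` is the only URI scheme in use, as in the example, and `resolve_token` is a hypothetical helper, not how `ServiceAccountAuth` is implemented.

```python
from urllib.parse import unquote, urlparse


def resolve_token(value):
    """Return the token text for either a literal token or a file: URI.

    Hypothetical helper for illustration only:
      "file:serving.token"            -> read relative path serving.token
      "file:///abs/path/serving.token" -> read absolute path
      anything else                   -> returned unchanged as the token
    """
    if value.startswith("file:"):
        # urlparse puts the filesystem path in .path for both URI forms.
        path = unquote(urlparse(value).path)
        with open(path) as f:
            return f.read().strip()
    return value
```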

Specify the name of your model:

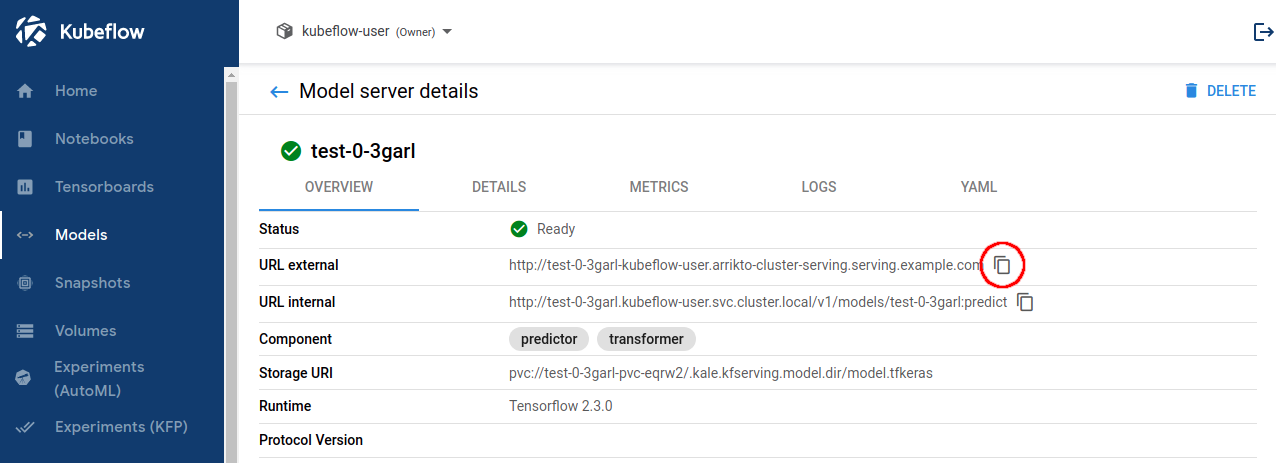

>>> model_name = "test-0-3garl"Copy the URL external field:

Specify the URL of your model by pasting the URL external value you copied:

    >>> model_url = "https://test-0-3garl-kubeflow-user.arrikto-cluster-serving.serving.example.com"

Important: Use `https` instead of `http`.

Prepare the URL and data for the request:

    >>> import json
    >>> import requests
    >>> data = json.dumps({"instances": model_data})
    >>> predict_url = "%s/v1/models/%s:predict" % (model_url, model_name)

Important: This assumes that your model supports Data Plane v1.
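Preparing the request comes down to two things: the Data Plane v1 endpoint `<model_url>/v1/models/<model_name>:predict` and a JSON body of the form `{"instances": [...]}`. The sketch below builds both without touching the network and rejects non-HTTPS model URLs, reflecting the note about `https`. `build_v1_request` is a hypothetical helper, not part of the Arrikto wheels.

```python
import json
from urllib.parse import urlparse


def build_v1_request(model_url, model_name, instances):
    """Return (predict_url, body) for a Data Plane v1 :predict call.

    Hypothetical helper. Raises ValueError if model_url does not use https.
    """
    if urlparse(model_url).scheme != "https":
        raise ValueError("model_url must use https, got %r" % model_url)
    # Drop a trailing slash so the path does not contain "//v1".
    predict_url = "%s/v1/models/%s:predict" % (model_url.rstrip("/"), model_name)
    # Data Plane v1: the rows to score go under the "instances" key.
    body = json.dumps({"instances": instances})
    return predict_url, body
```

With the values from the steps above, `predict_url, data = build_v1_request(model_url, model_name, model_data)` produces the same URL and body as the manual preparation.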

Initialize the authentication mechanism with ServiceAccount tokens for Kubernetes:

    >>> from rok_kubernetes.auth import ServiceAccountAuth
    >>> auth = ServiceAccountAuth(sa_token=token, kubernetes_host=kubernetes_host, verify=True)

Make an authenticated request and verify that it succeeds with `<Response [200]>`:

    >>> r = requests.post(predict_url, auth=auth, data=data, verify=True)
    >>> print(r)
    <Response [200]>

Inspect the response to get your predictions:

    >>> print(r.json())
    {'predictions': [1]}

You can view the short-lived token with:

    >>> from rok_common.protect import unprotect_obj
    >>> unprotect_obj(auth.short_lived_token)
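In a script rather than an interactive session, you would typically check the status and extract the predictions in one place. A minimal sketch, assuming the Data Plane v1 response shape shown above (`{"predictions": [...]}`); `extract_predictions` is a hypothetical helper that takes the status code and response text, so it can be exercised without a live endpoint.

```python
import json


def extract_predictions(status_code, text):
    """Return the "predictions" list from a Data Plane v1 response.

    Hypothetical helper. Raises RuntimeError for any non-200 status and
    KeyError if the JSON body has no "predictions" key.
    """
    if status_code != 200:
        # Include the start of the body to help diagnose the failure.
        raise RuntimeError("prediction request failed with HTTP %d: %s"
                           % (status_code, text[:200]))
    return json.loads(text)["predictions"]
```

With the response object from the steps above, you would call `extract_predictions(r.status_code, r.text)`.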