Serve Model from Notebook¶

In this section, you will serve a trained Machine Learning (ML) model using Kale and KServe.

Warning

This API is deprecated. We recommend you to serve a model by first submitting it as an MLMD artifact. Read the Serve SKLearn Models guide to implement this.

Overview

What You’ll Need¶

- An Arrikto EKF or MiniKF deployment with the default Kale Docker image.

Procedure¶

Create a new notebook server using the default Kale Docker image. The image will have the following naming scheme:

gcr.io/arrikto/jupyter-kale-py38:<IMAGE_TAG>Note

The

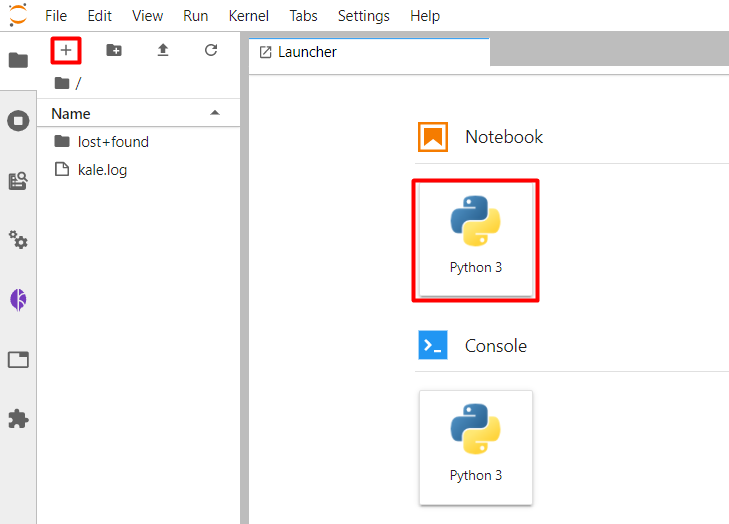

<IMAGE_TAG>varies based on the MiniKF or Arrikto EKF release.Create a new Jupyter notebook (that is, an IPYNB file):

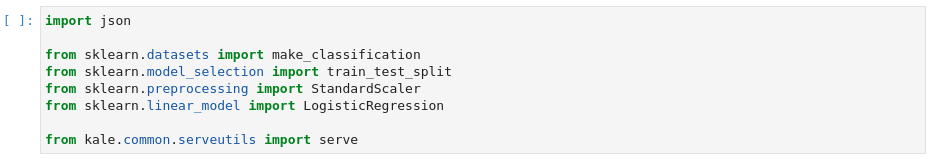

Copy and paste the import statements in the first code cell, then run it:

This is how your notebook cell will look like:

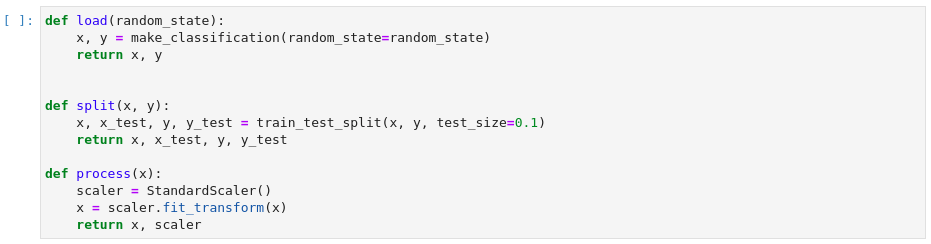

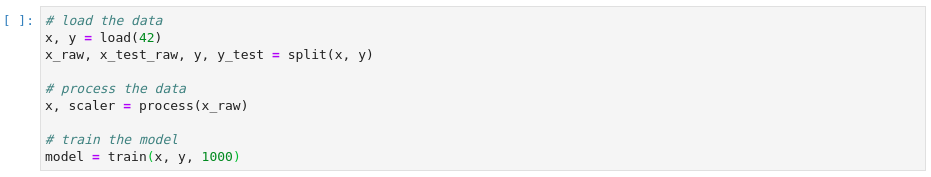

Load, split, and transform your dataset in a different code cell. Then run it:

This is how your notebook cell will look like:

Note

In the

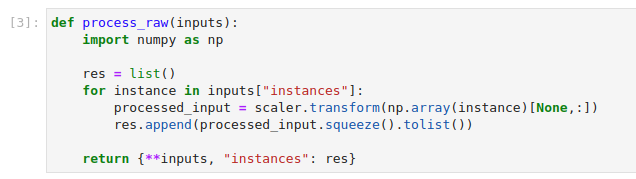

processfunction, we return the processed data as well as thescalerobject. You will need thescalerfor launching a KServe transformer component.Add the data processing function that you want to turn into a KServe transformer component in a different code cell and run it:

This is how your notebook cell will look like:

Note

When KServe feeds your data to the transformer component, it will pass the data one example at a time, as a plain Python list. So, to make this work, you need to cast the example as a NumPy array and expand its dimensions, because the

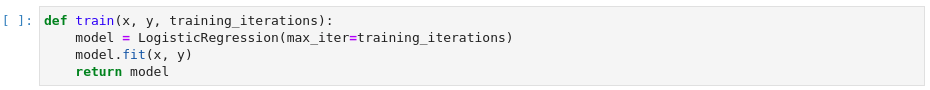

scalerfunction expects a 2D array. Finally, you need to turn it into a Python list and reduce the dimesions again, before returning the processed data.Create a function to train your model in the next code cell and run it:

This is how your notebook cell will look like:

Call the functions to bring everything together in a different code cell and run it:

This is how your notebook cell will look like:

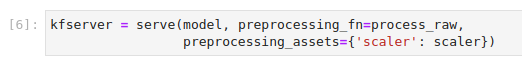

In a different code cell, call the

servefunction and pass the trained model, the preprocessing function, and its dependencies as arguments. Then, run the cell:This is how your notebook cell will look like:

Inside the

servefunction you first pass the model you have trained. This way you are instructing Kale to create a new InferenceService which will serve your model in its predictor component. Kale infers the type of predictor and creates the corresponding service.Moreover, if you pass a preprocessing function (

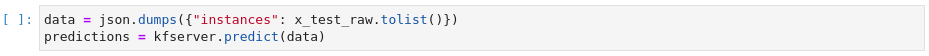

preprocessing_fn) Kale will also include a transformer component for the InferenceService, which will transform your data before passing it to the predictor component. Note that you should explicitly pass any global variable that the preprocessing function depends on as an asset (preprocessing_assets). In this case, the preprocessing function depends on thescalerobject. To this end, we pass a Python dictionary, where the key matches the name of the variable that the preprocessing function depends on and the value is the actual object.Invoke the server to get predictions in a different code cell and run it:

This is how your notebook cell will look like: