Serve XGBoost Models

This section will guide you through serving an XGBoost model using the Kale `serve` API.


What You’ll Need

- An Arrikto EKF or MiniKF deployment with the default Kale Docker image.

- An understanding of how the Kale SDK works.

- An understanding of how the Kale serve API works.

Procedure

This guide comprises three sections: In the first section, you will explore and process the dataset. Then, in the second section, you will leverage the Kale SDK to build a Machine Learning (ML) pipeline that trains and serves an XGBoost model. Finally, in the third section, you will invoke the model service to get predictions on a holdout test subset.

Explore Dataset

In this guide, you will work with the California Housing dataset. The California Housing dataset records demographic and housing information about specific districts in California. The end goal is to predict the median home value in each district.

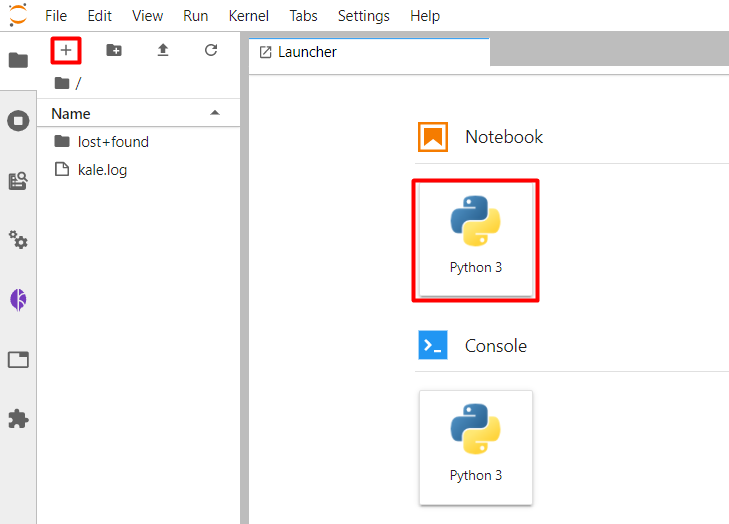

Create a new notebook server using the default Kale Docker image. The image will have the following naming scheme:

    gcr.io/arrikto/jupyter-kale-py38:<IMAGE_TAG>

Note

The `<IMAGE_TAG>` varies based on the MiniKF or Arrikto EKF release.

Connect to the Jupyter server and create a new Jupyter notebook (that is, an IPYNB file):

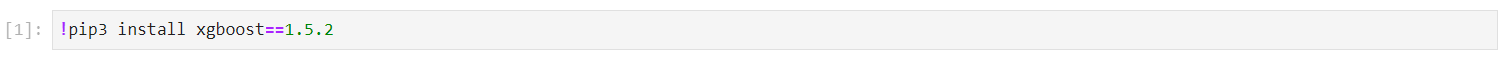

Install the `xgboost` library in the first code cell:

This is what your notebook cell will look like:

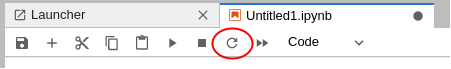

Restart the notebook’s kernel using the corresponding button in the UI:

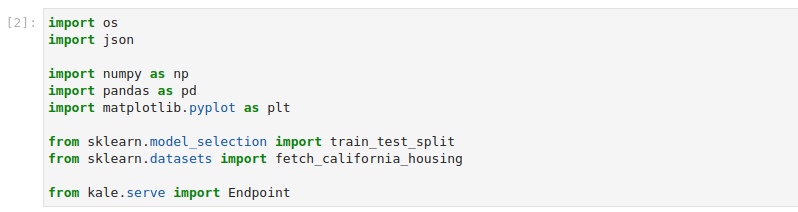

Copy and paste the import statements in the next code cell, and run it:

This is what your notebook cell will look like:

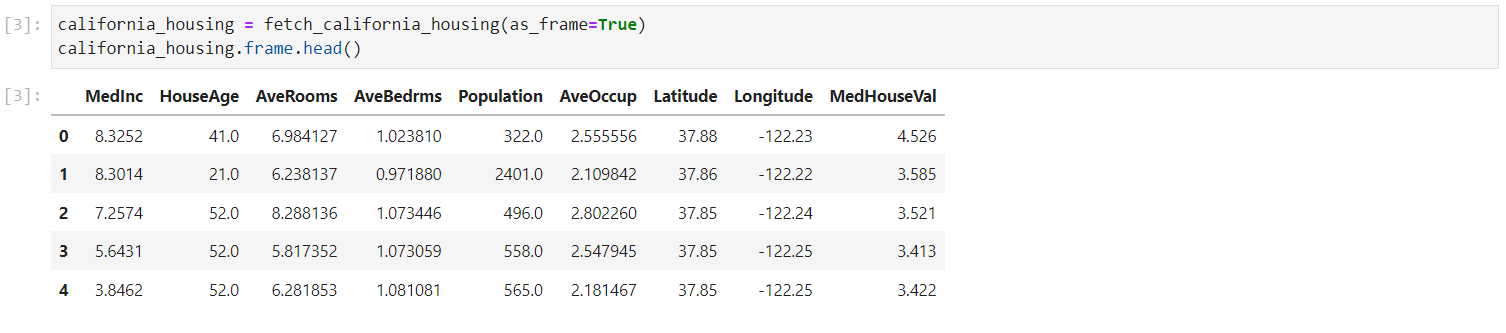

Load and explore the dataset. Copy and paste the following code into a new code cell, and run it:

This is what your notebook cell will look like:

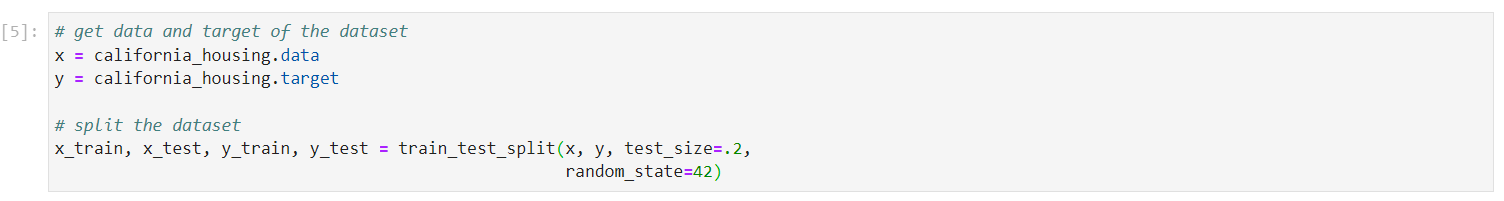

Load the features and targets of the dataset, and split it into training and test subsets. In a new cell, copy and paste the following code, and run it:
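A minimal sketch of such a cell, assuming it mirrors the `data_loading` step of the pipeline script used later in this guide (same `test_size` and `random_state`). The helper name `load_and_split` and its `fetch` parameter are illustrative and not part of the original notebook:

```python
from sklearn.datasets import fetch_california_housing
from sklearn.model_selection import train_test_split


def load_and_split(fetch=fetch_california_housing):
    """Load features and targets, then hold out 20% of the rows for testing.

    `fetch` is any callable returning an object with `data` and `target`
    attributes. It defaults to scikit-learn's California Housing loader,
    which downloads the dataset the first time it is called.
    """
    dataset = fetch()
    x, y = dataset.data, dataset.target
    # Same split parameters as the pipeline script, so the held-out rows
    # should be the ones the pipeline does not train on.
    return train_test_split(x, y, test_size=.2, random_state=42)
```

In the notebook, calling `x_train, x_test, y_train, y_test = load_and_split()` would keep the test subset available for the prediction requests at the end of this guide.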

This is what your notebook cell will look like:

Serve XGBoost Model

In the same notebook server, open a terminal, and create a new Python file. Name it `serve_xgboost_model.py`:

    $ touch serve_xgboost_model.py

Copy and paste the following code inside `serve_xgboost_model.py`:

xgboost_starter.py

    # Copyright © 2022 Arrikto Inc. All Rights Reserved.

    """Kale SDK.

    This script uses an ML pipeline to train and serve an XGBoost Model.
    """

    import xgboost as xgb

    from typing import Tuple

    from sklearn.model_selection import train_test_split
    from sklearn.datasets import fetch_california_housing

    from kale.types import MarshalData
    from kale.sdk import pipeline, step


    @step(name="data_loading")
    def load_split_dataset() -> Tuple[MarshalData, MarshalData]:
        """Fetch California Housing dataset."""
        # get data and target of the dataset
        california_housing = fetch_california_housing()
        x = california_housing.data
        y = california_housing.target

        # split the dataset
        x_train, _, y_train, _ = train_test_split(x, y, test_size=.2,
                                                  random_state=42)
        return x_train, y_train


    @step(name="model_training")
    def train(x: MarshalData, y: MarshalData) -> MarshalData:
        """Train a XGBRegressor model."""
        model = xgb.XGBRegressor(objective='reg:squarederror',
                                 colsample_bytree=1,
                                 eta=0.3,
                                 learning_rate=0.1,
                                 max_depth=5,
                                 alpha=10,
                                 n_estimators=2000)
        model.fit(x, y)
        return model


    @pipeline(name="regression", experiment="xgboost-tutorial")
    def ml_pipeline():
        """Run the ML pipeline."""
        x_train, y_train = load_split_dataset()
        train(x_train, y_train)


    if __name__ == "__main__":
        ml_pipeline()

This script defines a KFP run using the Kale SDK. Specifically, it defines a pipeline with two steps:

- The first step (`data_loading`) loads and splits the California Housing dataset.

- The second step (`model_training`) trains an `XGBRegressor`.

Create a new function which logs a `Transformer` artifact, using the Kale API:

xgboost_transformer.py

      # Copyright © 2022 Arrikto Inc. All Rights Reserved.

      """Kale SDK.

      This script uses an ML pipeline to train and serve an XGBoost Model.
      """

      import xgboost as xgb

      from typing import Tuple

      from sklearn.model_selection import train_test_split
      from sklearn.datasets import fetch_california_housing

      from kale.types import MarshalData
      from kale.sdk import pipeline, step
    + from kale.common import mlmdutils, artifacts
    +
    +
    + def _postprocess(inputs):
    +     """Postprocess the prediction value."""
    +     value = inputs["outputs"][0]["data"][0]
    +     inputs["outputs"][0]["data"][0] = round(value * 100000, 2)
    +     return inputs


      @step(name="data_loading")
      def load_split_dataset() -> Tuple[MarshalData, MarshalData]:
          """Fetch California Housing dataset."""
          # get data and target of the dataset
          california_housing = fetch_california_housing()
          x = california_housing.data
          y = california_housing.target

          # split the dataset
          x_train, _, y_train, _ = train_test_split(x, y, test_size=.2,
                                                    random_state=42)
          return x_train, y_train
    +
    +
    + @step(name="register_transformer")
    + def register_transformer() -> int:
    +     """Register a Transformer artifact."""
    +     # create and submit a Transformer artifact
    +     mlmd = mlmdutils.get_mlmd_instance()
    +
    +     transformer_artifact = artifacts.Transformer(
    +         name="Transformer",
    +         postprocess_fn=_postprocess
    +     ).submit_artifact()
    +
    +     mlmd.link_artifact_as_output(transformer_artifact.id)
    +
    +     return transformer_artifact.id


      @step(name="model_training")
      def train(x: MarshalData, y: MarshalData) -> MarshalData:
          """Train a XGBRegressor model."""
          model = xgb.XGBRegressor(objective='reg:squarederror',
                                   colsample_bytree=1,
                                   eta=0.3,
                                   learning_rate=0.1,
                                   max_depth=5,
                                   alpha=10,
                                   n_estimators=2000)
          model.fit(x, y)
          return model


      @pipeline(name="regression", experiment="xgboost-tutorial")
      def ml_pipeline():
          """Run the ML pipeline."""
          x_train, y_train = load_split_dataset()
    +     register_transformer()
          train(x_train, y_train)


      if __name__ == "__main__":
          ml_pipeline()

The `Transformer` artifact will create a serving transformer component which will postprocess the predictions of a trained model. In this case, it converts the predicted median home value of each district from units of $100,000, which is how the dataset expresses the target, into dollars.

Create a new step function which logs an `XGBoostModel` artifact, using the Kale API. The following snippet summarizes the changes in code:

Important

Running these pipelines locally won’t work. After introducing the `register_model` step, run the pipeline as a KFP pipeline, since this step creates a Kubeflow artifact.

xgboost_log_model_artifact.py

      # Copyright © 2022 Arrikto Inc. All Rights Reserved.

      """Kale SDK.

      This script uses an ML pipeline to train and serve an XGBoost Model.
      """

      import xgboost as xgb

      from typing import Tuple

      from sklearn.model_selection import train_test_split
      from sklearn.datasets import fetch_california_housing

    + from kale.ml import Signature
      from kale.types import MarshalData
      from kale.sdk import pipeline, step
      from kale.common import mlmdutils, artifacts


      def _postprocess(inputs):
          """Postprocess the prediction value."""
          value = inputs["outputs"][0]["data"][0]
          inputs["outputs"][0]["data"][0] = round(value * 100000, 2)
          return inputs


      @step(name="data_loading")
      def load_split_dataset() -> Tuple[MarshalData, MarshalData]:
          """Fetch California Housing dataset."""
          # get data and target of the dataset
          california_housing = fetch_california_housing()
          x = california_housing.data
          y = california_housing.target

          # split the dataset
          x_train, _, y_train, _ = train_test_split(x, y, test_size=.2,
                                                    random_state=42)
          return x_train, y_train


      @step(name="register_transformer")
      def register_transformer() -> int:
          """Register a Transformer artifact."""
          # create and submit a Transformer artifact
          mlmd = mlmdutils.get_mlmd_instance()

          transformer_artifact = artifacts.Transformer(
              name="Transformer",
              postprocess_fn=_postprocess
          ).submit_artifact()

          mlmd.link_artifact_as_output(transformer_artifact.id)

          return transformer_artifact.id


      @step(name="model_training")
      def train(x: MarshalData, y: MarshalData) -> MarshalData:
          """Train a XGBRegressor model."""
          model = xgb.XGBRegressor(objective='reg:squarederror',
                                   colsample_bytree=1,
                                   eta=0.3,
                                   learning_rate=0.1,
                                   max_depth=5,
                                   alpha=10,
                                   n_estimators=2000)
          model.fit(x, y)
          return model


    + @step(name="register_model")
    + def register_model(model: MarshalData, x: MarshalData, y: MarshalData) -> int:
    +     mlmd = mlmdutils.get_mlmd_instance()
    +
    +     signature = Signature(
    +         input_size=[1] + list(x[0].shape),
    +         output_size=[1] + list(y[0].shape),
    +         input_dtype=x.dtype,
    +         output_dtype=y.dtype)
    +
    +     model_artifact = artifacts.XGBoostModel(
    +         model=model,
    +         description="A simple XGBRegressor model",
    +         version="1.0.0",
    +         author="Kale",
    +         signature=signature,
    +         tags={"app": "xgboost-tutorial"}).submit_artifact()
    +
    +     mlmd.link_artifact_as_output(model_artifact.id)
    +     return model_artifact.id
    +
    +
      @pipeline(name="regression", experiment="xgboost-tutorial")
      def ml_pipeline():
          """Run the ML pipeline."""
          x_train, y_train = load_split_dataset()
          register_transformer()
    -     train(x_train, y_train)
    +     model = train(x_train, y_train)
    +     register_model(model, x_train, y_train)


      if __name__ == "__main__":
          ml_pipeline()

Create a new step function which serves the `XGBoostModel` artifact you created in the previous step, using the Kale `serve` API. The following snippet summarizes the changes in code:

xgboost_serve.py

      # Copyright © 2022 Arrikto Inc. All Rights Reserved.

      """Kale SDK.

      This script uses an ML pipeline to train and serve an XGBoost Model.
      """

      import xgboost as xgb

      from typing import Tuple

      from sklearn.model_selection import train_test_split
      from sklearn.datasets import fetch_california_housing

    + from kale.serve import serve
      from kale.ml import Signature
      from kale.types import MarshalData
      from kale.sdk import pipeline, step
      from kale.common import mlmdutils, artifacts


      def _postprocess(inputs):
          """Postprocess the prediction value."""
          value = inputs["outputs"][0]["data"][0]
          inputs["outputs"][0]["data"][0] = round(value * 100000, 2)
          return inputs


      @step(name="data_loading")
      def load_split_dataset() -> Tuple[MarshalData, MarshalData]:
          """Fetch California Housing dataset."""
          # get data and target of the dataset
          california_housing = fetch_california_housing()
          x = california_housing.data
          y = california_housing.target

          # split the dataset
          x_train, _, y_train, _ = train_test_split(x, y, test_size=.2,
                                                    random_state=42)
          return x_train, y_train


      @step(name="register_transformer")
      def register_transformer() -> int:
          """Register a Transformer artifact."""
          # create and submit a Transformer artifact
          mlmd = mlmdutils.get_mlmd_instance()

          transformer_artifact = artifacts.Transformer(
              name="Transformer",
              postprocess_fn=_postprocess
          ).submit_artifact()

          mlmd.link_artifact_as_output(transformer_artifact.id)

          return transformer_artifact.id


      @step(name="model_training")
      def train(x: MarshalData, y: MarshalData) -> MarshalData:
          """Train a XGBRegressor model."""
          model = xgb.XGBRegressor(objective='reg:squarederror',
                                   colsample_bytree=1,
                                   eta=0.3,
                                   learning_rate=0.1,
                                   max_depth=5,
                                   alpha=10,
                                   n_estimators=2000)
          model.fit(x, y)
          return model


      @step(name="register_model")
      def register_model(model: MarshalData, x: MarshalData, y: MarshalData) -> int:
          mlmd = mlmdutils.get_mlmd_instance()

          signature = Signature(
              input_size=[1] + list(x[0].shape),
              output_size=[1] + list(y[0].shape),
              input_dtype=x.dtype,
              output_dtype=y.dtype)

          model_artifact = artifacts.XGBoostModel(
              model=model,
              description="A simple XGBRegressor model",
              version="1.0.0",
              author="Kale",
              signature=signature,
              tags={"app": "xgboost-tutorial"}).submit_artifact()

          mlmd.link_artifact_as_output(model_artifact.id)
          return model_artifact.id


    + @step(name="serve_model")
    + def serve_model(model_id: int, transformer_id: int):
    +     serve_config = {"limits": {"memory": "4Gi"},
    +                     "protocol_version": "v2",
    +                     "predictor": {"runtime": "kserve-mlserver"}}
    +     serve(name="xgboost-tutorial", model_id=model_id,
    +           transformer_id=transformer_id, serve_config=serve_config)
    +
    +
      @pipeline(name="regression", experiment="xgboost-tutorial")
      def ml_pipeline():
          """Run the ML pipeline."""
          x_train, y_train = load_split_dataset()
    -     register_transformer()
    +     transformer_id = register_transformer()
          model = train(x_train, y_train)
    -     register_model(model, x_train, y_train)
    +     model_id = register_model(model, x_train, y_train)
    +     serve_model(model_id, transformer_id)


      if __name__ == "__main__":
          ml_pipeline()

Deploy and run your code as a KFP pipeline:

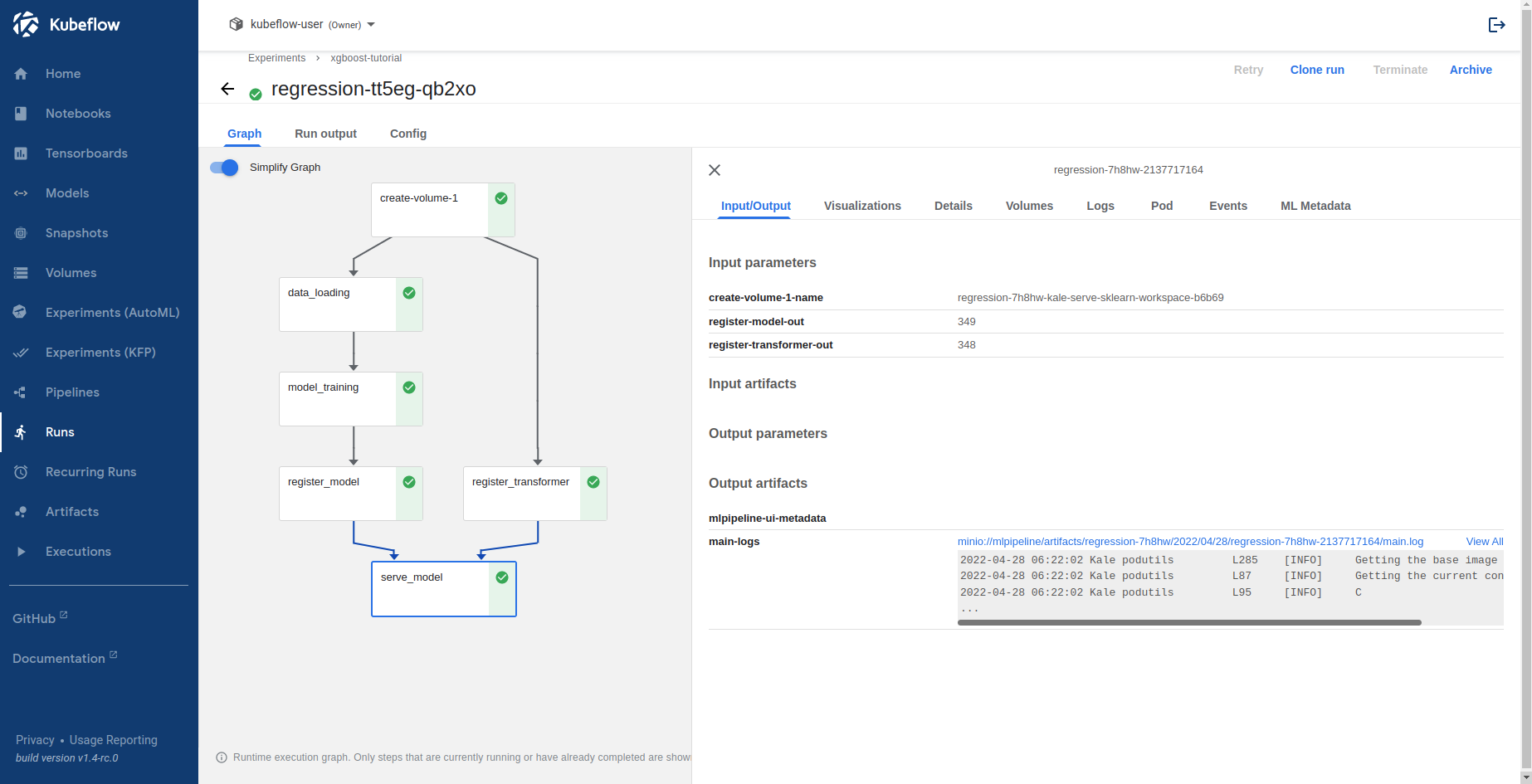

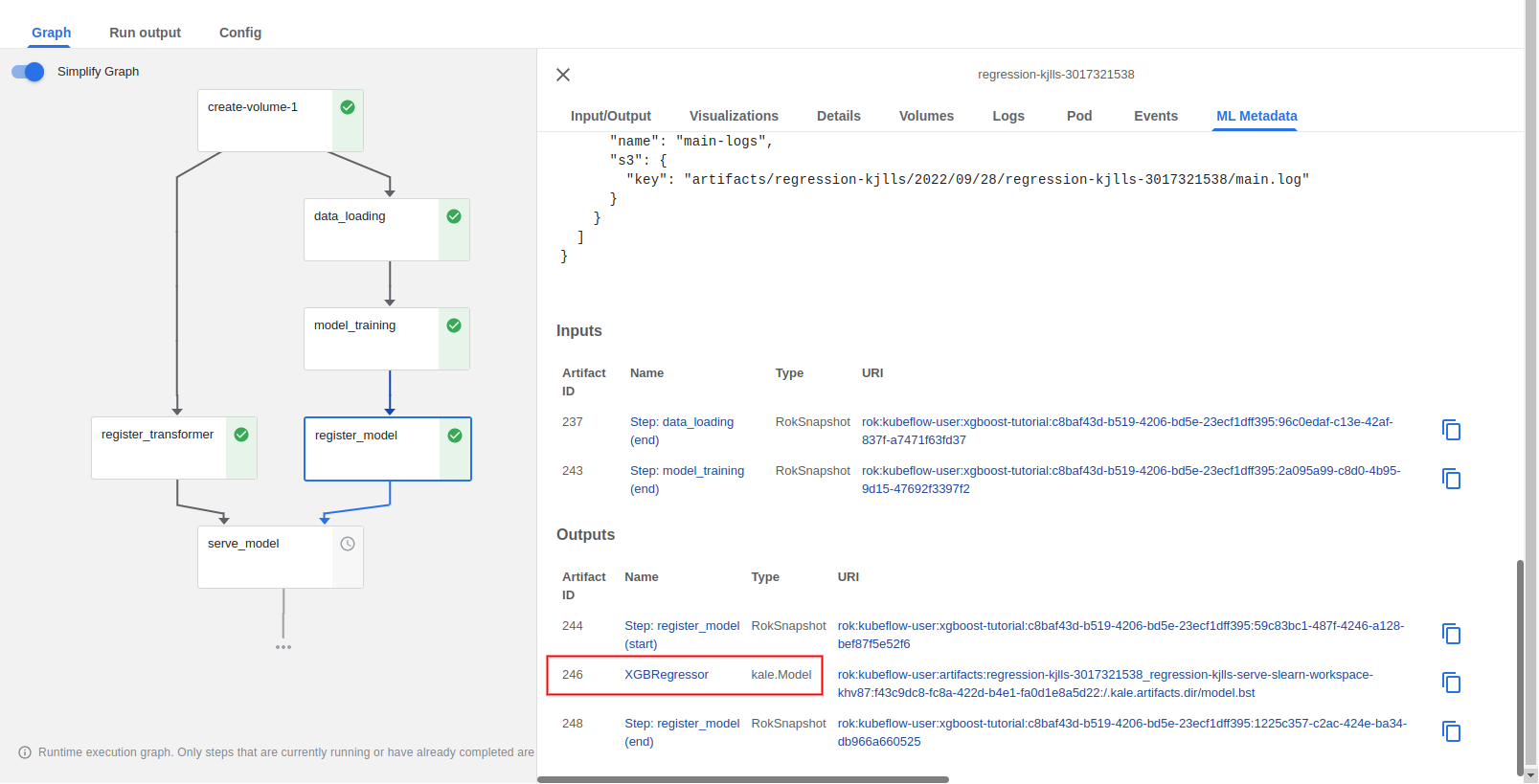

    $ python3 -m kale serve_xgboost_model.py --kfp

Select Runs to view the KFP run you just created. This is what it looks like when the pipeline completes successfully:

When the `register_model` step completes, you can view the model artifact through the KFP UI:

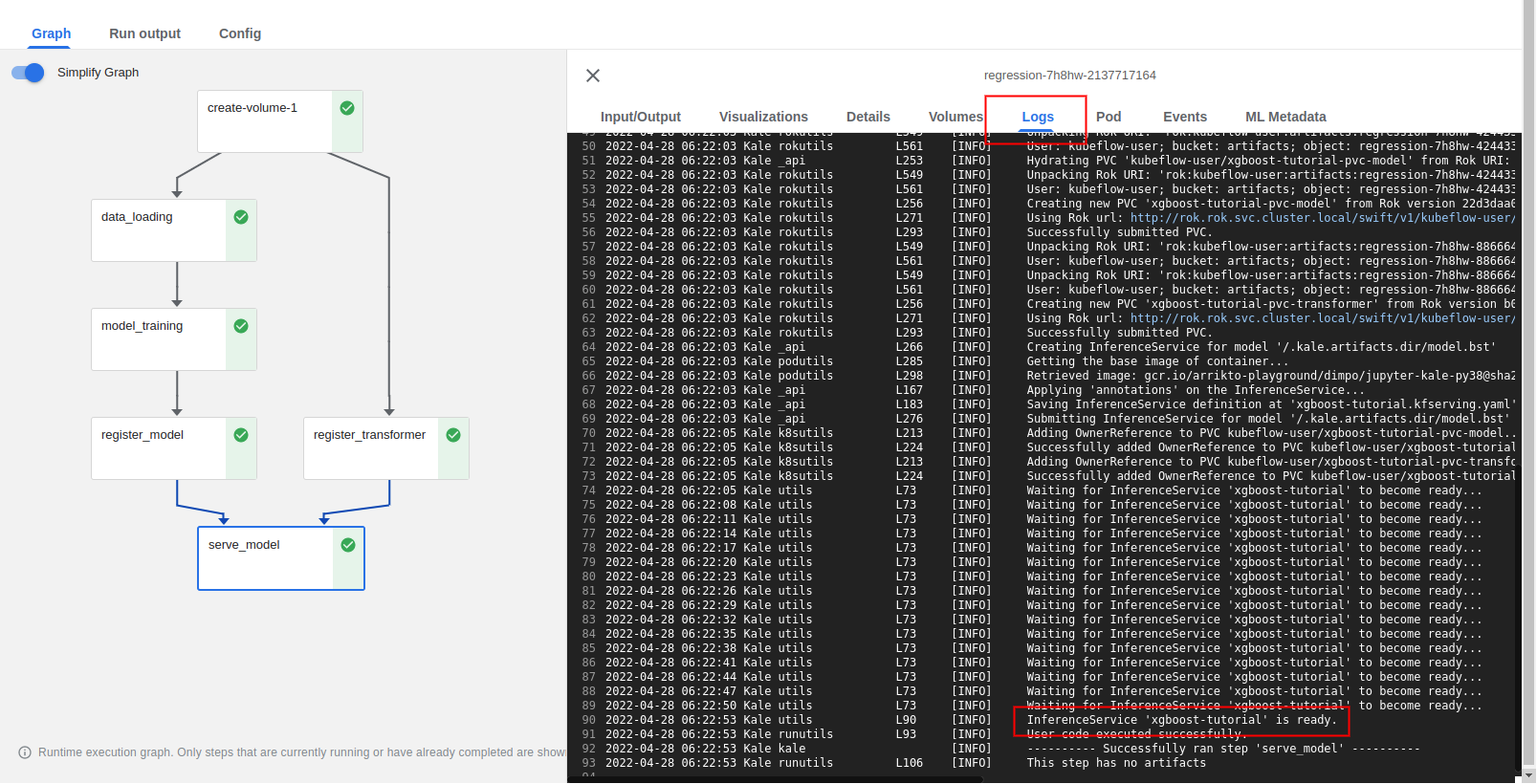
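The artifact stores the signature built in `register_model`. As a sketch of what those expressions evaluate to, the following uses zero-filled NumPy arrays as stand-ins for the training data. This is an assumption made so the snippet runs without the dataset or Kale; the shapes correspond to 80% of the 20,640 California Housing rows with 8 features each:

```python
import numpy as np

# Stand-ins with the shapes of the arrays returned by the `data_loading`
# step; the values themselves do not matter here.
x = np.zeros((16512, 8))
y = np.zeros(16512)

# The same expressions that `register_model` passes to `Signature`.
input_size = [1] + list(x[0].shape)   # one sample with 8 features
output_size = [1] + list(y[0].shape)  # one scalar prediction per sample

print(input_size, x.dtype)   # [1, 8] float64
print(output_size, y.dtype)  # [1] float64
```

Indexing `y[0]` yields a NumPy scalar whose shape is the empty tuple, which is why the output size reduces to `[1]`.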

Wait until the pipeline completes. Check the Logs tab of the `serve_model` step to see whether the `InferenceService` is running.

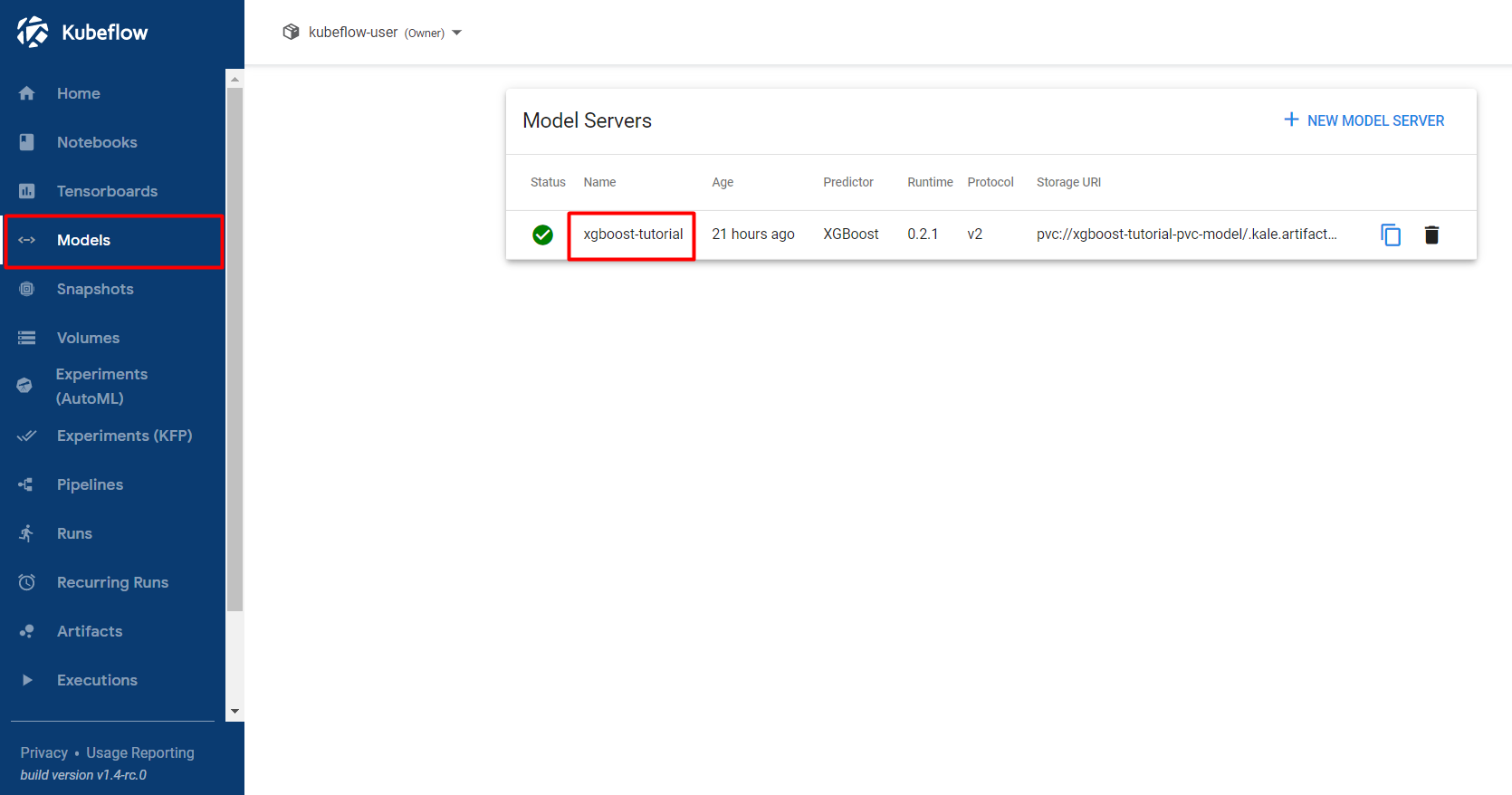

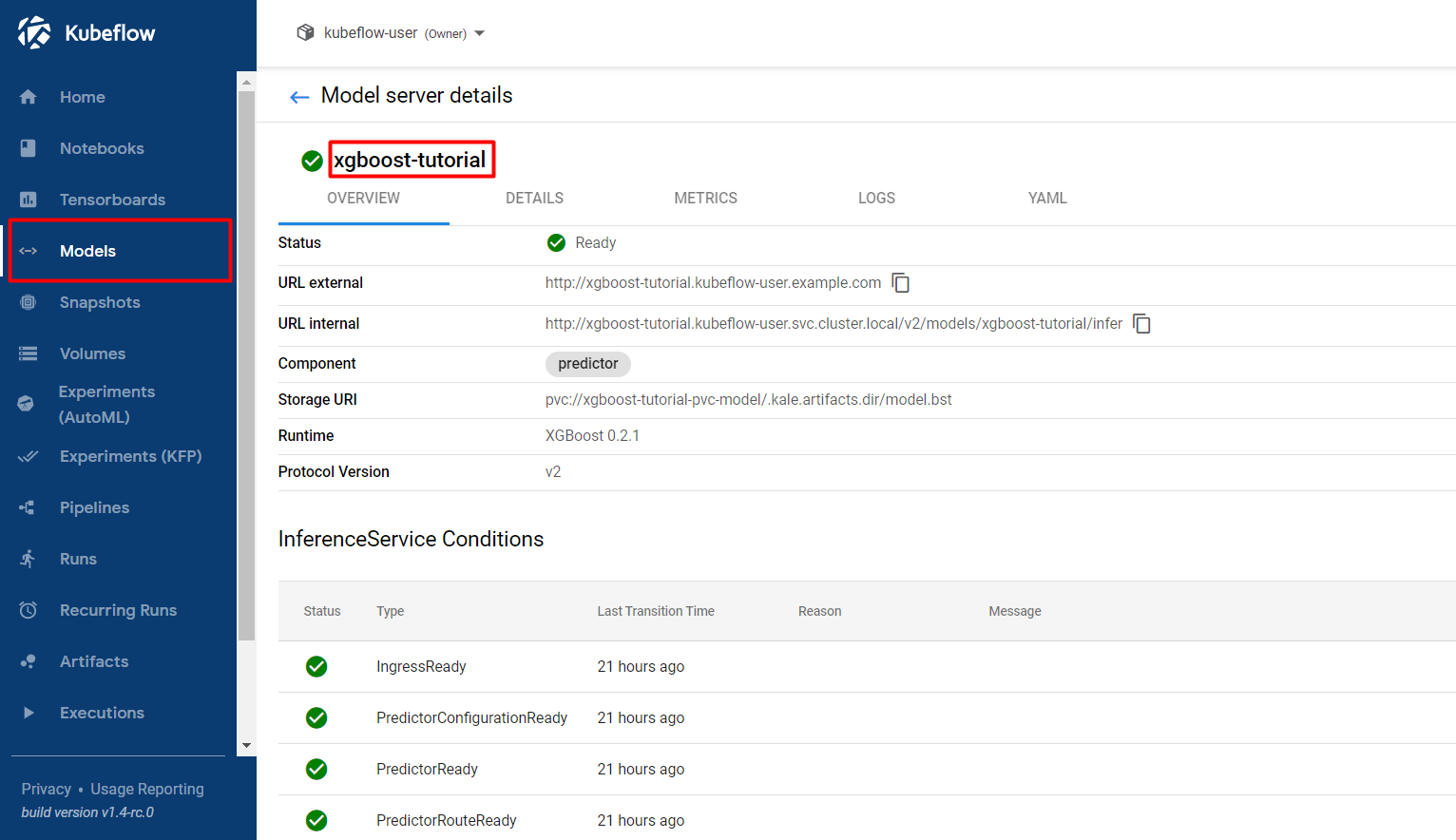

Select Models and click on the endpoint you created:

Get Predictions

In this section, you will query the model endpoint to get predictions for the examples in the holdout test subset.

Navigate to the Models UI to retrieve the name of the `InferenceService`. In this example, it is `xgboost-tutorial`.

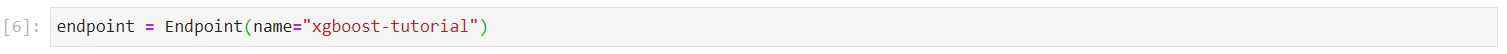

In the existing notebook, in a different code cell, initialize a Kale `Endpoint` object using the name of the `InferenceService` you retrieved in the previous step. Then, run the cell:

Note

When initializing an `Endpoint`, you can also pass the namespace of the `InferenceService`. For example, if your namespace is `my-namespace`:

If you do not provide one, Kale assumes the namespace of the notebook server. In our case, it is `kubeflow-user`.

This is what your notebook cell will look like:

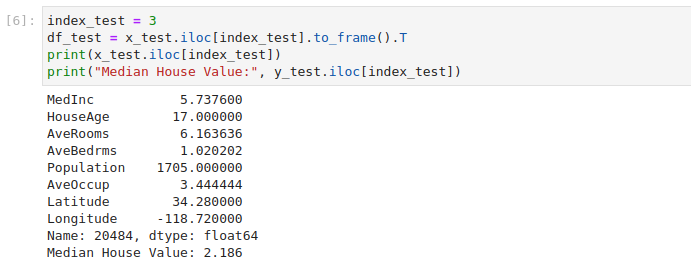

Inspect a test sample and convert it into JSON format:

This is what your notebook cell will look like:

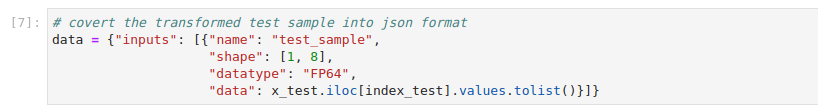

Prepare the data payload for the prediction request. Copy and paste the following code in a new cell, and run it:

This is what your notebook cell will look like:

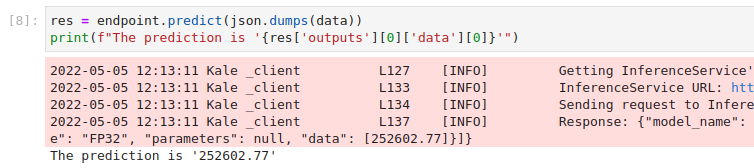
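Because the pipeline sets `"protocol_version": "v2"`, the request body presumably follows the V2 inference protocol, where each input tensor carries a name, shape, datatype, and data. A minimal sketch of building such a body for one sample follows. The helper `build_v2_request`, the tensor name `input-0`, the `FP64` datatype, and the feature values are all assumptions for illustration, so check them against what your endpoint expects:

```python
import json


def build_v2_request(features, name="input-0", datatype="FP64"):
    """Wrap a single sample in a V2 inference request body.

    `name` and `datatype` are illustrative defaults, not values taken
    from the Kale documentation.
    """
    row = [float(v) for v in features]
    return {
        "inputs": [{
            "name": name,
            "shape": [1, len(row)],  # one sample, len(row) features
            "datatype": datatype,
            "data": row,             # flattened, row-major tensor contents
        }]
    }


# Illustrative feature values for one district (8 features).
sample = [8.3252, 41.0, 6.9841, 1.0238, 322.0, 2.5556, 37.88, -122.23]
payload = json.dumps(build_v2_request(sample))
print(payload)
```

The `[1, 8]` shape matches the input size recorded in the model signature by the `register_model` step.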

Invoke the server to get predictions. Copy and paste the following snippet in a different code cell, and run it:

This is what your notebook cell will look like: