Configure Specs for Model¶

In this section, you will configure the underlying Kubernetes objects as well

as the InferenceService Kale will create to serve a trained Machine Learning

(ML) model. You will train a SKLearn model, configure the Kubernetes and

InferenceService specs, serve the trained model, and get predictions.

Overview

What You’ll Need¶

- An Arrikto EKF or MiniKF deployment with the default Kale Docker image.

- An understanding of how you can serve a model with Kale.

Procedure¶

Create a new notebook server using the default Kale Docker image. The image will have the following naming scheme:

gcr.io/arrikto/jupyter-kale-py38:<IMAGE_TAG>Note

The

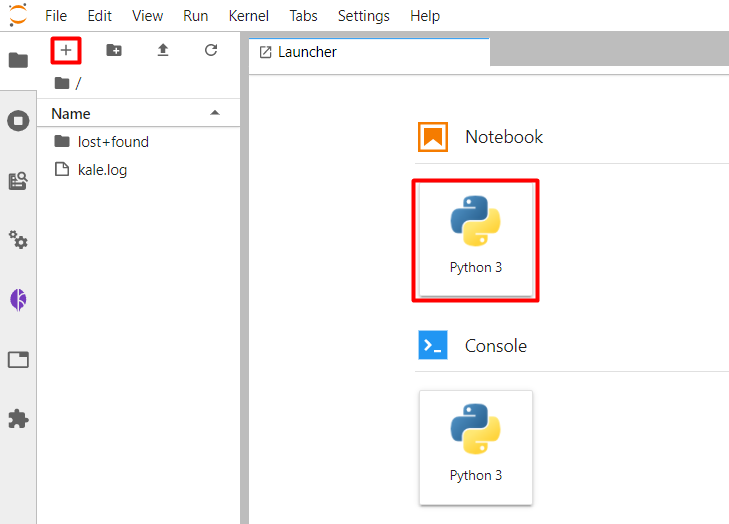

<IMAGE_TAG>varies based on the MiniKF or Arrikto EKF release.Create a new Jupyter notebook (that is, an IPYNB file):

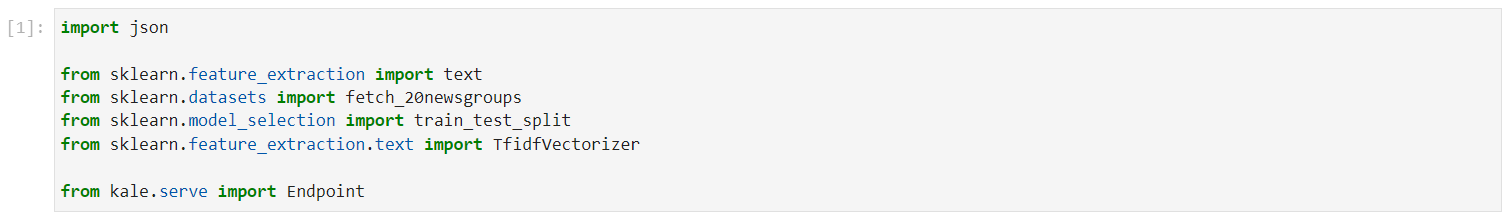

Copy and paste the import statements in the first code cell, and run it:

This is how your notebook cell will look like:

In a different code cell, fetch the dataset and print the topic names. Copy and paste the following code, and run it:

This is how your notebook cell will look like:

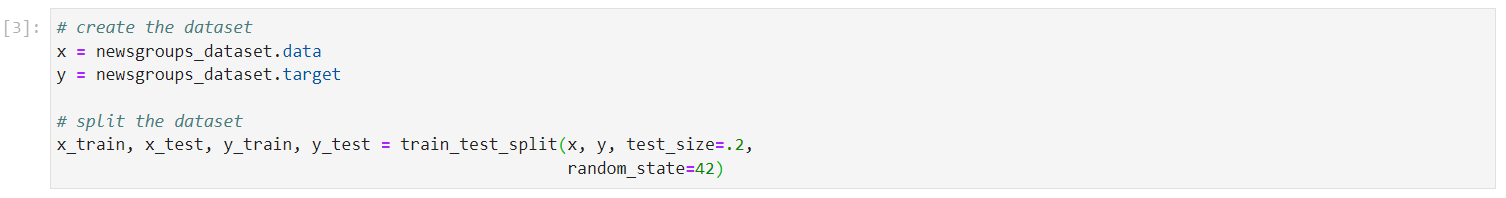

Load the features and targets of the dataset, and split it into train and test subsets. In a new cell, copy and paste the following code, and run it:

This is how your notebook cell will look like:

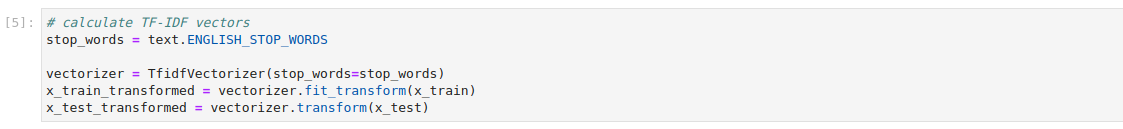

Use the TF-IDF vectorizer to transform the raw training and test subsets into a form that you can use to train a machine learning model:

This is how your notebook cell will look like:

In the same notebook server, open a terminal, and create a new Python file. Name it

serve_model.py:$ touch serve_model.pyCopy and paste the following code inside

serve_model.py:serve_model.py1 # Copyright © 2022 Arrikto Inc. All Rights Reserved. 2 3 """Kale SDK. 4 5 This script uses an ML pipeline to train and serve an SKLearn Model. 6 """ 7 8 from kale.ml import Signature 9 from kale.sdk import pipeline, step 10 from kale.common import mlmdutils, artifacts 11 12 from sklearn.feature_extraction import text 13 from sklearn.naive_bayes import MultinomialNB 14 from sklearn.datasets import fetch_20newsgroups 15 from sklearn.model_selection import train_test_split 16 from sklearn.feature_extraction.text import TfidfVectorizer 17 18 19 @step(name="data_loading") 20 def load_split_dataset(): 21 """Fetch 20newgroup dataset.""" 22 # load the data 23 newsgroups_dataset = fetch_20newsgroups(random_state=42) 24 x = newsgroups_dataset.data 25 y = newsgroups_dataset.target 26 27 # split the dataset 28 x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=.2, 29 random_state=42) 30 return x_train, y_train, x_test, y_test 31 32 33 @step(name="data_preprocess") 34 def preprocess(x_train, x_test): 35 """Preprocess the input data.""" 36 # get stopwords 37 stop_words = text.ENGLISH_STOP_WORDS 38 # TF-IDF vectors 39 vectorizer = TfidfVectorizer(stop_words=stop_words) 40 vectors_train = vectorizer.fit_transform(x_train) 41 vectors_test = vectorizer.transform(x_test) 42 return vectors_train, vectors_test 43 44 45 @step(name="model_training") 46 def train(x, y): 47 """Train a MultinomialNB model.""" 48 classifier = MultinomialNB(alpha=.01) 49 model = classifier.fit(x, y) 50 return model 51 52 53 @step(name="register_model") 54 def register_model(model, x, y): 55 mlmd = mlmdutils.get_mlmd_instance() 56 57 signature = Signature( 58 input_size=[1] + list(x[0].shape), 59 output_size=[1] + list(y[0].shape), 60 input_dtype=x.dtype, 61 output_dtype=y.dtype) 62 63 model_artifact = artifacts.SklearnModel( 64 model=model, 65 description="A simple MultinomialNB model", 66 version="1.0.0", 67 author="Kale", 68 signature=signature, 69 tags={"app": "sklearn-model"}).submit_artifact() 70 71 mlmd.link_artifact_as_output(model_artifact.id) 72 return model_artifact.id 73 74 75 @pipeline(name="classification", experiment="sklearn-model") 76 def ml_pipeline(): 77 """Run the ML pipeline.""" 78 x_train, y_train, x_test, y_test = load_split_dataset() 79 vectors_train, vectors_test = preprocess(x_train, x_test) 80 model = train(vectors_train, y_train) 81 register_model(model, vectors_train, y_train) 82 83 84 if __name__ == "__main__": 85 ml_pipeline() Create a new step function which specifies your desired Kubernetes and

InferenceServiceconfigurations, and serves the trained model:serve_model_spec.py1 # Copyright © 2022 Arrikto Inc. All Rights Reserved. 2 3 """Kale SDK. 4-5 4 5 This script uses an ML pipeline to train and serve an SKLearn Model. 6 """ 7 8 from kale.ml import Signature 9 + from kale.serve import serve 10 from kale.sdk import pipeline, step 11 from kale.common import mlmdutils, artifacts 12 13-72 13 from sklearn.feature_extraction import text 14 from sklearn.naive_bayes import MultinomialNB 15 from sklearn.datasets import fetch_20newsgroups 16 from sklearn.model_selection import train_test_split 17 from sklearn.feature_extraction.text import TfidfVectorizer 18 19 20 @step(name="data_loading") 21 def load_split_dataset(): 22 """Fetch 20newgroup dataset.""" 23 # load the data 24 newsgroups_dataset = fetch_20newsgroups(random_state=42) 25 x = newsgroups_dataset.data 26 y = newsgroups_dataset.target 27 28 # split the dataset 29 x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=.2, 30 random_state=42) 31 return x_train, y_train, x_test, y_test 32 33 34 @step(name="data_preprocess") 35 def preprocess(x_train, x_test): 36 """Preprocess the input data.""" 37 # get stopwords 38 stop_words = text.ENGLISH_STOP_WORDS 39 # TF-IDF vectors 40 vectorizer = TfidfVectorizer(stop_words=stop_words) 41 vectors_train = vectorizer.fit_transform(x_train) 42 vectors_test = vectorizer.transform(x_test) 43 return vectors_train, vectors_test 44 45 46 @step(name="model_training") 47 def train(x, y): 48 """Train a MultinomialNB model.""" 49 classifier = MultinomialNB(alpha=.01) 50 model = classifier.fit(x, y) 51 return model 52 53 54 @step(name="register_model") 55 def register_model(model, x, y): 56 mlmd = mlmdutils.get_mlmd_instance() 57 58 signature = Signature( 59 input_size=[1] + list(x[0].shape), 60 output_size=[1] + list(y[0].shape), 61 input_dtype=x.dtype, 62 output_dtype=y.dtype) 63 64 model_artifact = artifacts.SklearnModel( 65 model=model, 66 description="A simple MultinomialNB model", 67 version="1.0.0", 68 author="Kale", 69 signature=signature, 70 tags={"app": "sklearn-model"}).submit_artifact() 71 72 mlmd.link_artifact_as_output(model_artifact.id) 73 return model_artifact.id 74 75 76 + @step(name="serve_model") 77 + def serve_model(model_artifact_id): 78 + serve_config = {"limits": {"cpu": 1, "memory": "4Gi"}, 79 + "requests": {"cpu": "100m", "memory": "3Gi"}, 80 + "labels": {"my-sklearn-model": "logistic-regression"}, 81 + "annotations": {"sidecar.istio.io/inject": "false"}, 82 + "protocol_version": "v1"} 83 + serve(name="sklearn-model", 84 + model_id=model_artifact_id, 85 + serve_config=serve_config) 86 + 87 + 88 @pipeline(name="classification", experiment="sklearn-model") 89 def ml_pipeline(): 90 """Run the ML pipeline.""" 91 x_train, y_train, x_test, y_test = load_split_dataset() 92 vectors_train, vectors_test = preprocess(x_train, x_test) 93 model = train(vectors_train, y_train) 94 - register_model(model, vectors_train, y_train) 95 + artifact_id = register_model(model, vectors_train, y_train) 96 + serve_model(artifact_id) 97 98 99 if __name__ == "__main__": 100 ml_pipeline() See also

- Check out all the available configurations you can specify.

Important

The KServe controller uses some default values for

limitsandrequeststhat the admin sets, unless you explicitly specify them. At the same time, Kubernetes will not schedule Pods when theirrequestsexceed theirlimits.To ensure that the resulting resources will have valid specs, whenever you set either one of

limitsorrequestsmake sure to specify some value for the other one as well, following the aforementioned restriction. If you provide a value just forrequestswhich exceeds the defaultlimits, Kubernetes will not schedule the resulting Pods.The

protocol_versionattribute sets the protocol version for anInferenceServicecreated for a SKLearn predictor, whereas the other attributes set configurations for the underlying Kubernetes objects.The

serve_configargument accepts either adict, which Kale will use to initialize aServeConfigobject, or aServeConfigdirectly.Deploy and run your code as a KFP pipeline:

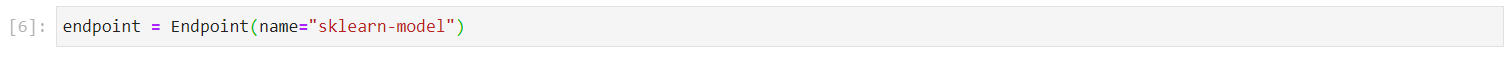

$ python3 -m kale serve_model.py --kfpIn the existing notebook, in a different code cell, initialize a Kale

Endpointobject using the name of theInferenceServiceyou created. Then, run the cell:Note

When initializing an

Endpoint, you can also pass the namespace of theInferenceService. If you do not provide one, Kale assumes the namespace of the notebook server.This is how your notebook cell will look like:

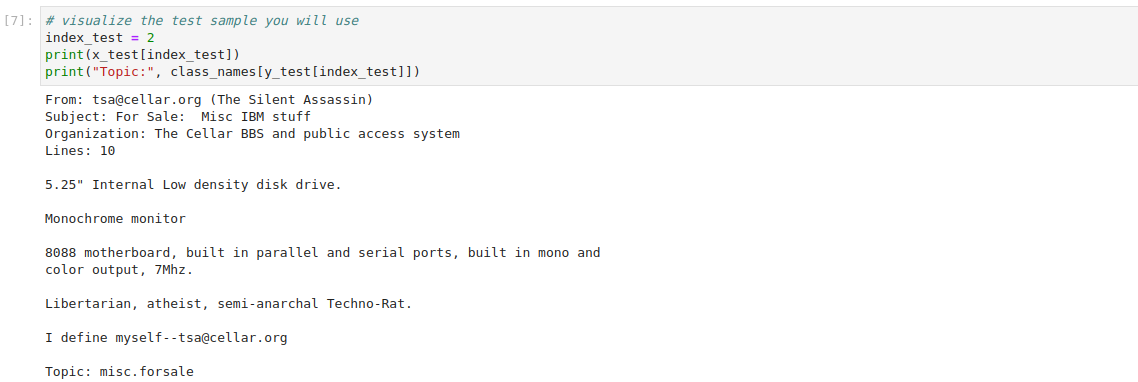

Visualize a test sample and transform the data into JSON format. Copy and paste the following code in a new cell, and run it:

This is how your notebook cell will look like:

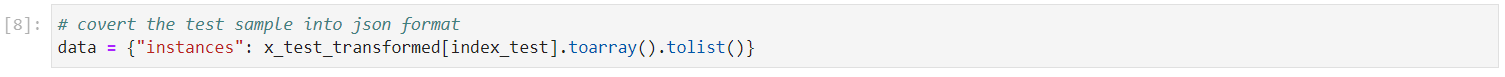

Prepare the data payload for the prediction request. Copy and paste the following code in a new cell, and run it:

This is how your notebook cell will look like:

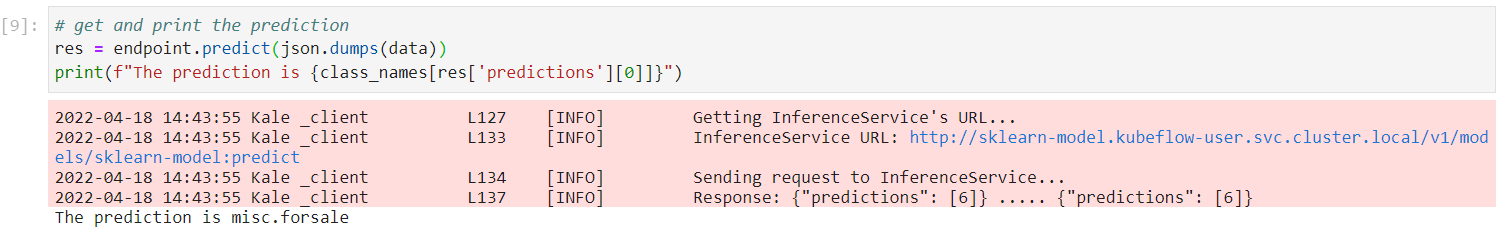

Invoke the server to get predictions. Copy and paste the following snippet in a different code cell, and run it:

This is how your notebook cell will look like:

Summary¶

You have successfully served and invoked a model with Kale, specifying custom

Kubernetes and InferenceService configurations for the corresponding

resources.

What’s Next¶

Check out how you can invoke an already deployed model.